These days, artificial intelligence (AI) seems to be an active ingredient in virtually every conversation about advanced technologies and automation. Given the intense activity in the field, many professionals and business leaders are evaluating AI and machine learning technologies for their potential to provide a competitive edge going into the next decade.

Needless to say, artificial intelligence is a rich field for discovery and understanding. However, without deeper AI training and education, it can be quite challenging to stay abreast of the rapid changes taking place within the field. At Entefy, we’re passionate about breakthrough computing and the many ways it can help people live and work better. So, to help demystify artificial intelligence and its many sub-components, our team has assembled this list of useful terms for anyone interested in AI and machine learning.

Be sure to bookmark this page for a handy quick-reference resource.

Algorithm. A procedure or formula, often mathematical, that defines a sequence of operations to solve a problem or class of problems.

Artificial intelligence (AI). The umbrella term for computer systems that can interpret, analyze, and learn from data in ways similar to human cognition.

Cardinality. In mathematics, a measure of the number of elements present in a set.

Centroid model. A type of classifier that computes the center of mass of each class and uses a distance metric to assign samples to classes during inference.
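
As a minimal sketch of the centroid model, the Python below computes one centroid (the mean of the samples) per class and classifies a new sample by its nearest centroid. It assumes numeric feature vectors and uses Euclidean distance as the metric, though other metrics are possible; the function names and toy data are hypothetical.

```python
from collections import defaultdict
from math import dist


def fit_centroids(samples, labels):
    """Compute the center of mass (mean vector) of the samples in each class."""
    sums = {}
    counts = defaultdict(int)
    for x, y in zip(samples, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
        for i, v in enumerate(x):
            sums[y][i] += v
        counts[y] += 1
    return {y: [s / counts[y] for s in total] for y, total in sums.items()}


def predict(centroids, x):
    """Assign x to the class whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda y: dist(centroids[y], x))


# Hypothetical toy data: two well-separated classes in 2-D.
X = [(1.0, 1.0), (1.5, 2.0), (8.0, 8.0), (9.0, 8.5)]
y = ["a", "a", "b", "b"]
centroids = fit_centroids(X, y)
print(centroids)                        # {'a': [1.25, 1.5], 'b': [8.5, 8.25]}
print(predict(centroids, (2.0, 2.0)))   # a
```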

Chatbot. A computer program (often designed as an AI-powered virtual agent) that provides information or takes actions in response to the user’s voice or text commands or both. Current chatbots are often deployed to provide customer service or support functions.

Class. A category of data indicated by the label of a target attribute.

Classifier. An instance of a machine learning model trained to predict a class.

Class imbalance. The quality of having a non-uniform distribution of samples grouped by target class.
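
One common way to quantify class imbalance, and to counteract it during training, is sketched below: the ratio of the largest class count to the smallest, plus inverse-frequency class weights computed as n_samples / (n_classes * count). That weighting formula is the heuristic scikit-learn documents for `class_weight="balanced"`; the function names and labels here are hypothetical.

```python
from collections import Counter


def imbalance_ratio(labels):
    """Ratio of the largest class count to the smallest (1.0 means perfectly balanced)."""
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())


def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * count_of_class)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {label: n_samples / (n_classes * count) for label, count in counts.items()}


# Hypothetical toy labels: 90 "normal" samples and 10 "fraud" samples.
labels = ["normal"] * 90 + ["fraud"] * 10
print(imbalance_ratio(labels))                  # 9.0
print(balanced_class_weights(labels)["fraud"])  # 5.0
```

With these weights, the 90 majority samples and the 10 minority samples each contribute the same total weight (50) to a weighted loss.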

Cognitive computing. A term that describes advanced AI systems that mimic the functioning of the human brain to improve decision-making and perform complex tasks.

Computer vision (CV). An artificial intelligence field focused on classifying and contextualizing the content of digital video and images.

Data curation. The process of collecting and managing data, including verification, annotation, and transformation. Also see training and dataset.

Data mining. The process of targeted discovery of information, patterns, or context within one or more data repositories.

DataOps. The management, optimization, and monitoring of data retrieval, storage, transformation, and distribution throughout the data life cycle, including preparation, pipelines, and reporting.

Deep learning. A subfield of machine learning that uses artificial neural networks with two or more hidden layers to train a computer to process data, recognize patterns, and make predictions.

Derived feature. A feature created from observations on a given dataset, with its value set as a result of classification, automated preprocessing, or sequenced model output.

Ensembling. A powerful technique whereby two or more algorithms, models, or neural networks are combined in order to generate more accurate predictions.
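
The sketch below illustrates one simple ensembling strategy, hard majority voting, in Python. The three "models" are hypothetical lists of predictions contrived so that each is wrong on a different sample; real ensembles may instead average predicted probabilities, weight the models, or stack them under a meta-model.

```python
from collections import Counter


def majority_vote(predictions):
    """Hard voting: for each sample, return the label predicted by the most models.

    `predictions` holds one list of predicted labels per model, all the same length.
    Ties go to the label that appears first among that sample's votes.
    """
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*predictions)]


def accuracy(pred, truth):
    """Fraction of predictions that match the true labels."""
    return sum(p == t for p, t in zip(pred, truth)) / len(truth)


# Hypothetical outputs from three binary classifiers, each wrong on a different sample.
truth = [1, 0, 1, 1, 0]
model_a = [1, 0, 0, 1, 0]
model_b = [1, 1, 1, 1, 0]
model_c = [0, 0, 1, 1, 0]

ensemble = majority_vote([model_a, model_b, model_c])
print([accuracy(m, truth) for m in (model_a, model_b, model_c)])  # [0.8, 0.8, 0.8]
print(accuracy(ensemble, truth))                                  # 1.0
```

Voting helps here only because the models' errors do not overlap; when models tend to make the same mistakes, combining them gains little.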

F1 score. A measure of a test’s accuracy calculated as the harmonic mean of precision and recall.
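
Written out, with TP, FP, and FN denoting true positives, false positives, and false negatives, precision P, recall R, and the F1 score relate as follows:

```latex
P = \frac{TP}{TP + FP}, \qquad
R = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2}{\frac{1}{P} + \frac{1}{R}} = \frac{2PR}{P + R} = \frac{2\,TP}{2\,TP + FP + FN}
```

For example, with P = 0.5 and R = 1.0, F1 = 2(0.5)(1.0) / 1.5 ≈ 0.667, below the arithmetic mean of 0.75: the harmonic mean pulls the score toward the weaker of the two metrics.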

Feature. In ML, a specific variable or measurable value that is used as input to an algorithm.

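Generative adversarial network (GAN). A class of AI algorithms whereby two neural networks compete against each other: a generator creates synthetic data samples, and a discriminator tries to distinguish them from real data. Each network improves in response to the other, so the generator’s output becomes increasingly realistic.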

Hyperparameter. In ML, a parameter whose value is set before the learning process begins, as opposed to model parameters (such as weights), whose values are learned during training.

Intelligent process automation (IPA). A collection of technologies, including robotic process automation (RPA) and AI, to help automate certain digital processes. Also see robotic process automation (RPA).

Logistic regression. A type of classifier that models the relationship between a categorical (typically binary) dependent variable and one or more independent variables, using a logistic function to estimate the probability of each class.
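
A minimal sketch of binary logistic regression with a single input variable, fitted by batch gradient descent on the log loss, follows. Gradient descent is only one of several ways to fit the model (libraries often use other optimizers), and the learning rate, epoch count, and "hours studied" data are hypothetical choices for illustration.

```python
import math


def sigmoid(z):
    """Logistic function, written to avoid overflow for large negative z."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(xs, ys, lr=0.1, epochs=5000):
    """Fit P(y = 1 | x) = sigmoid(w * x + b) by batch gradient descent on the mean log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # derivative of log loss w.r.t. the linear score
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Hypothetical toy data: hours studied vs. whether an exam was passed (1) or not (0).
hours = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 5.0]
passed = [0, 0, 0, 1, 0, 1, 1, 1]
w, b = fit_logistic(hours, passed)
print(round(sigmoid(w * 1.0 + b), 3))  # estimated probability of passing after 1 hour
print(round(sigmoid(w * 4.5 + b), 3))  # estimated probability of passing after 4.5 hours
```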

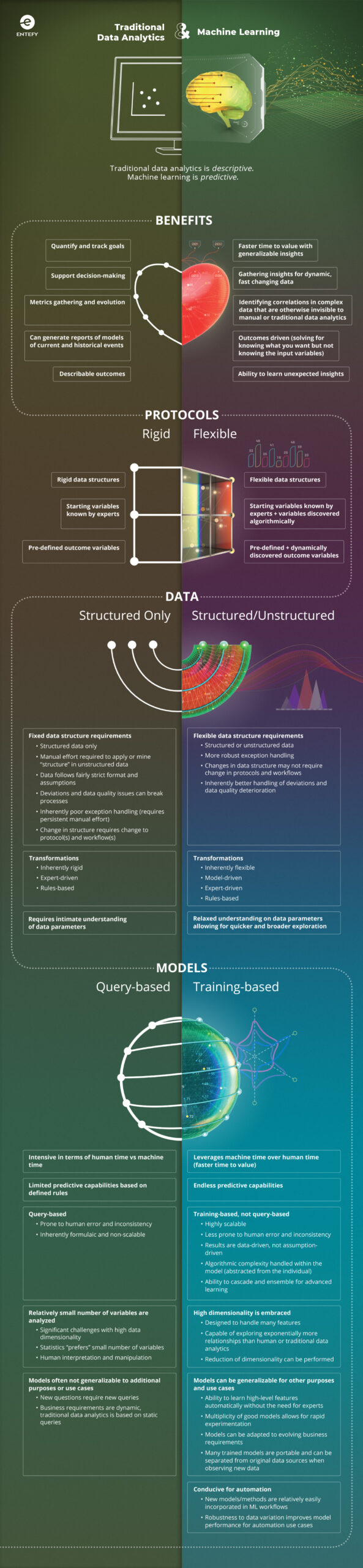

Machine learning (ML). A subset of artificial intelligence that gives machines the ability to analyze a set of data, draw conclusions about the data, and then make predictions when presented with new data without being explicitly programmed to do so.

MIMI. The term used to refer to Entefy’s multimodal AI platform and technology.

Multimodal AI. Machine learning models that analyze and relate data from multiple modalities or formats, such as text, images, audio, and video.

N-gram model. In NLP, a model that counts the frequency of all contiguous sequences of [1, n] tokens.
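
The counting step can be sketched in a few lines of Python, assuming whitespace tokenization. The helper `bigram_prob` (hypothetical, like the other names here) shows one common way the counts become a model: the maximum-likelihood estimate P(word | prev) = count(prev, word) / count(prev).

```python
from collections import Counter


def ngram_counts(tokens, n):
    """Count every contiguous sequence of 1 to n tokens."""
    counts = Counter()
    for size in range(1, n + 1):
        for i in range(len(tokens) - size + 1):
            counts[tuple(tokens[i:i + size])] += 1
    return counts


def bigram_prob(counts, prev, word):
    """Maximum-likelihood estimate of P(word | prev) from the counts."""
    return counts[(prev, word)] / counts[(prev,)] if counts[(prev,)] else 0.0


tokens = "the cat sat on the mat".split()
counts = ngram_counts(tokens, 2)
print(counts[("the",)])                  # 2
print(counts[("the", "cat")])            # 1
print(len(counts))                       # 10 (5 distinct unigrams + 5 distinct bigrams)
print(bigram_prob(counts, "the", "cat")) # 0.5
```

Here "the" appears twice and is followed by "cat" once, so the estimate of P(cat | the) is 0.5.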

Naive Bayes. A probabilistic classifier based on applying Bayes’ rule, which makes a strong (naive) assumption that features are independent of one another.
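
A minimal sketch of one common variant, multinomial naive Bayes for text with add-one (Laplace) smoothing, follows. The class name, whitespace tokenization, the choice to skip words never seen in training, and the toy spam/ham data are all assumptions for illustration; log probabilities are summed to avoid numeric underflow.

```python
import math
from collections import Counter, defaultdict


class NaiveBayesText:
    """Multinomial naive Bayes over word counts, with add-one (Laplace) smoothing."""

    def fit(self, docs, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)  # class -> word -> count
        self.vocab = set()
        for doc, label in zip(docs, labels):
            words = doc.lower().split()
            self.word_counts[label].update(words)
            self.vocab.update(words)
        return self

    def log_score(self, doc, label):
        """Unnormalized log posterior: log P(label) + sum of log P(word | label).

        Summing independent per-word terms is exactly the naive independence assumption.
        """
        n_docs = sum(self.class_counts.values())
        total_words = sum(self.word_counts[label].values())
        score = math.log(self.class_counts[label] / n_docs)
        for word in doc.lower().split():
            if word not in self.vocab:
                continue  # ignore words never seen in training
            count = self.word_counts[label][word]
            score += math.log((count + 1) / (total_words + len(self.vocab)))
        return score

    def predict(self, doc):
        """Return the class with the highest posterior score."""
        return max(self.class_counts, key=lambda label: self.log_score(doc, label))


# Hypothetical toy training set.
docs = ["win cash prize now", "cheap prize win", "meeting agenda attached", "lunch meeting tomorrow"]
labels = ["spam", "spam", "ham", "ham"]
model = NaiveBayesText().fit(docs, labels)
print(model.predict("win a prize"))       # spam
print(model.predict("meeting tomorrow"))  # ham
```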

Named entity recognition (NER). An NLP model that locates and classifies elements in text into pre-defined categories.

Natural language processing (NLP). A field of computer science and artificial intelligence focused on processing and analyzing natural human language or text data.

Natural language understanding (NLU). A specialty area within natural language processing focused on advanced analysis of text to extract meaning and context.

Neural networks. A specific technique for doing machine learning that is inspired by the neural connections of the human brain. A network learns by adjusting the strength (weight) of the connections between its artificial neurons as it analyzes large numbers of data inputs, gradually discovering context and meaning.

Ontology. A data model that represents relationships between concepts, events, entities, or other categories. In the AI context, ontologies are often used by AI systems to analyze, share, or reuse knowledge.

Precision. In machine learning, a measure of accuracy computed as the ratio of true positives to all predicted positives (true positives plus false positives) for a given class.

Primary feature. A feature whose value is present in, or derived directly from, a dataset.

Random forest. An ensemble machine learning method that blends the output of multiple decision trees in order to produce improved results.

Recall. In machine learning, a measure of accuracy computed as the ratio of true positives to all actual positives (true positives plus false negatives) for a given class.
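
Precision and recall for a single class can be computed from paired true and predicted labels as sketched below. Treating one label as "positive" and returning 0.0 when a ratio is undefined (no predicted or no actual positives) are assumptions of this sketch; libraries differ in how they handle that case.

```python
def precision_recall(y_true, y_pred, positive):
    """Precision and recall for one class, treating `positive` as the positive label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # correctly predicted positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # predicted positive, actually not
    fn = sum(t == positive and p != positive for t, p in pairs)  # actual positives that were missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical toy labels.
y_true = ["cat", "cat", "dog", "dog", "cat", "dog"]
y_pred = ["cat", "dog", "dog", "cat", "cat", "cat"]
print(precision_recall(y_true, y_pred, "cat"))  # precision 0.5, recall 2/3
```

For "cat", two of the four "cat" predictions are correct (precision 0.5), and two of the three actual cats are found (recall 2/3).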

Reinforcement learning (RL). A machine learning technique in which an agent independently learns the rules of a system through trial and error, taking actions and receiving rewards or penalties that guide it toward better behavior.
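
To illustrate trial-and-error learning, the sketch below uses tabular Q-learning, one classic RL algorithm (the glossary does not name a specific one), on a hypothetical five-state corridor where only reaching the right end pays a reward. The environment, learning rate `alpha`, discount `gamma`, and exploration rate `epsilon` are illustrative assumptions.

```python
import random

N_STATES, GOAL = 5, 4   # states 0..4; state 4 is the terminal goal
ACTIONS = (-1, +1)      # index 0 = move left, index 1 = move right


def step(state, action):
    """Hypothetical toy environment: a corridor where reaching GOAL pays reward 1."""
    next_state = min(max(state + action, 0), GOAL)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def greedy(q_row, rng):
    """Pick the highest-valued action, breaking ties at random."""
    best = max(q_row)
    return rng.choice([a for a, v in enumerate(q_row) if v == best])


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore with probability epsilon, otherwise exploit.
            a = rng.randrange(2) if rng.random() < epsilon else greedy(q[state], rng)
            next_state, reward, done = step(state, ACTIONS[a])
            # Move Q(s, a) toward the reward plus the discounted best next-state value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(GOAL)]
print(policy)  # the learned policy should move right in every non-goal state
```

Nothing tells the agent that "right" is correct; it infers this from rewards that propagate backward through the Q-table over many episodes.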

Robotic process automation (RPA). Business process automation that uses virtual software robots (not physical) to observe the user’s low-level or monotonous tasks performed using an application’s user interface in order to automate those tasks. Also see intelligent process automation (IPA).

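Self-supervised learning. A form of autonomous supervised learning in which a system identifies and extracts supervisory signals naturally available in unlabeled data, for example by learning to predict a hidden word from its surrounding context, rather than relying on human-provided labels.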

Semi-supervised learning. A machine learning technique that trains on a small amount of labeled data combined with a larger amount of unlabeled data. It fits between supervised learning (in which data used for training is labeled) and unsupervised learning (in which data used for training is unlabeled).

Strong AI. The term used to describe artificial general intelligence, or machine intelligence that matches human cognitive capabilities across multiple domains. Often characterized by self-improvement mechanisms and generalization rather than specific training to perform in narrow domains. Also see weak AI.

Structured data. Data that has been organized using a predetermined model, often in the form of a table with values and linked relationships. Also see unstructured data.

Supervised learning. A machine learning technique that learns from labeled training data, in which each example is paired with the correct output, in order to make predictions on new data. Also see unsupervised learning.

Taxonomy. A hierarchical, structured list of terms that illustrates the relationships between those terms. Also see ontology.

Time series. A sequence of data points indexed in time order, often recorded at equally spaced intervals.

Training. The process of providing a dataset to a machine learning model for the purpose of improving the precision or effectiveness of the model. Also see supervised learning and unsupervised learning.

Transfer learning. A machine learning technique where the knowledge derived from solving one problem is applied to a different (typically related) problem.

Tuning. The process of optimizing the hyperparameters of an AI algorithm to improve its precision or effectiveness. Also see algorithm.

Unstructured data. Data that has not been organized with a predetermined order or structure, often making it difficult for computer systems to process and analyze.

Unsupervised learning. A machine learning technique that discovers patterns or structure through training performed on unlabeled data. Also see supervised learning.
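
As one example of unsupervised learning, the sketch below implements k-means clustering (Lloyd's algorithm), a technique the glossary does not name, which groups unlabeled points purely by their proximity. The number of clusters k, the random initialization, and the toy points are assumptions for illustration.

```python
import random
from math import dist


def kmeans(points, k, max_iterations=100, seed=0):
    """Lloyd's k-means: partition unlabeled points into k clusters around their means."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # start from k distinct data points
    for _ in range(max_iterations):
        # Assignment step: attach each point to its nearest center.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its cluster (keep it if the cluster is empty).
        new_centers = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centers[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centers == centers:  # converged: assignments will no longer change
            break
        centers = new_centers
    return centers, clusters


# Hypothetical unlabeled points forming two loose groups.
points = [(1, 1), (1.5, 2), (1, 1.5), (8, 8), (9, 8.5), (8.5, 9)]
centers, clusters = kmeans(points, k=2)
print(clusters)
```

No labels are supplied; the two groups emerge from the geometry of the data alone.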

Vectorization. The process of transforming data into a numerical vector representation.
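
One simple vectorization scheme for text, bag-of-words counting, is sketched below. Lowercasing, whitespace tokenization, and sorted vocabulary order are assumptions of this sketch; many other schemes (TF-IDF weighting, learned embeddings) exist.

```python
def build_vocabulary(docs):
    """Map each distinct word to a column index, in sorted order."""
    words = sorted({w for doc in docs for w in doc.lower().split()})
    return {w: i for i, w in enumerate(words)}


def vectorize(doc, vocab):
    """Bag-of-words: count how often each vocabulary word occurs in the document."""
    vec = [0] * len(vocab)
    for w in doc.lower().split():
        if w in vocab:  # words outside the vocabulary are ignored
            vec[vocab[w]] += 1
    return vec


docs = ["the cat sat", "the dog sat on the cat"]
vocab = build_vocabulary(docs)
print(vocab)                             # {'cat': 0, 'dog': 1, 'on': 2, 'sat': 3, 'the': 4}
print([vectorize(d, vocab) for d in docs])  # [[1, 0, 0, 1, 1], [1, 1, 1, 1, 2]]
```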

Weak AI. The term used to describe a narrow AI built and trained for a specific task. Also see strong AI.

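Word embedding. In NLP, the vectorization of words and phrases, typically as dense numeric vectors positioned so that words with similar meanings lie close to one another.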
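
Word embeddings are commonly compared using cosine similarity. The sketch below uses hypothetical, hand-picked three-dimensional vectors purely to show the computation; real embeddings are learned from large text corpora and typically have far more dimensions.

```python
import math

# Hypothetical, hand-picked vectors for illustration only (not learned from data).
embeddings = {
    "king": [0.8, 0.7, 0.1],
    "queen": [0.8, 0.75, 0.15],
    "apple": [0.1, 0.2, 0.9],
}


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def most_similar(word):
    """Return the other vocabulary word whose vector is closest in cosine similarity."""
    others = (w for w in embeddings if w != word)
    return max(others, key=lambda w: cosine_similarity(embeddings[word], embeddings[w]))


print(most_similar("king"))  # queen
```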