In the early days of the Internet, cybersecurity wasn’t the hot topic it is now. Security incidents were more of a nuisance or an inconvenient prank. Back then, the web was still experimental, more of a side hustle for a relatively narrow, techie segment of the population, and nothing even remotely close to the lifeline it is for modern society today. Cyber threats and malware could usually be mitigated with basic tools or system upgrades. But as technology grew smarter and more dependent on the Internet, it also became more vulnerable. Today, cloud, mobile, and IoT technologies, along with endless applications, software frameworks, libraries, and widgets, serve as a veritable buffet of access points for hackers to exploit. As a result, cyberattacks are now far more widespread and can damage much more than just your computer. The acceleration of digital trends during the COVID-19 pandemic has only exacerbated the problem.

What is malware?

Malware, or malicious software, is a general industry term that covers a slew of cybersecurity threats. Hackers use malware and other sophisticated techniques (including phishing and social-engineering attacks) to infiltrate and damage your computing devices and applications, or simply to steal valuable data. Malware includes common threats such as ransomware, viruses, adware, and spyware, as well as lesser-known threats such as Trojan horses, worms, and cryptojacking. Cybercriminals hack to control data and systems for money, to make political statements, or just for fun.

Today, cyber threats represent a real risk to consumers, corporations, and governments virtually everywhere. The reality is that most systems are not yet capable of identifying or protecting against all cyber threats, and those threats are only growing in volume and sophistication.

Examples of cybersecurity attacks

Cyberattacks in recent years have risen at an alarming rate. There are too many examples of significant exploits to list here. However, the Center for Strategic & International Studies (CSIS) provides a 12-month summary illustrating the noticeable surge in significant cyberattacks, ranging from DDoS attacks to ransomware targeting health care databases, banking networks, military communications, and much more.

All manner of information is at risk in these sorts of attacks, including personally identifiable information, confidential corporate data, and government secrets and classified data. For instance, in February 2022, “a U.N. report claimed that North Korea hackers stole more than $50 million between 2020 and mid-2021 from three cryptocurrency exchanges. The report also added that in 2021 that amount likely increased, as the DPRK launched 7 attacks on cryptocurrency platforms to help fund their nuclear program in the face of a significant sanctions regime.”

Another example is the July 2021 attack in which Russian hackers “exploited a vulnerability in Kaseya’s virtual systems/server administrator (VSA) software allowing them to deploy a ransomware attack on the network. The hack affected around 1,500 small and midsized businesses, with attackers asking for $70 million in payment.”

Living in a digitally interconnected world also means growing vulnerability for physical assets. Last year, you may recall hearing that the Colonial Pipeline, the largest fuel pipeline in the United States, was hit by a targeted ransomware attack. In May 2021, “the energy company shut down the pipeline and later paid a $5 million ransom. The attack is attributed to DarkSide, a Russian speaking hacking group.”

Cybersecurity is a matter of national (and international) security

As a relatively new operational component of the Department of Homeland Security, the Cybersecurity & Infrastructure Security Agency (CISA) “leads the national effort to understand, manage, and reduce risk to our cyber and physical infrastructure.” Its work helps “ensure a secure and resilient infrastructure for the American people.” Since its establishment in 2018, CISA has issued a number of emergency directives to help protect federal information systems. One recent example is Emergency Directive 22-02, which addressed the Apache Log4j vulnerability that put computer systems around the world at risk. In December 2021, CISA concluded that the Log4j vulnerability posed “an unacceptable risk to Federal Civilian Executive Branch agencies and requires emergency action.”

In the private sector, “88% of boards now view cybersecurity as a business risk.” Historically, protection against cyber threats relied heavily on traditional rules-based software and human monitoring. Unfortunately, those traditional approaches are failing the modern enterprise, because several factors have, in combination, frankly surpassed our human ability to manage them effectively. These factors include the sheer volume of digital activity; the growing number of unguarded vulnerabilities in computer systems (including IoT, BYOD, and other smart devices), code bases, and operational processes; the divergence of the two “technospheres” (Chinese and Western); the apparent rise in the number of cybercriminals; and the novel approaches hackers use to stay one step ahead of their victims.

Costs of cybercrimes

Security analysts and experts are responsible for hunting down and eliminating potential security threats. But this is tedious and often strenuous work that involves massive sets of complex data, with plenty of opportunity for false positives and for real threats to go undetected.

When critical cyber breaches are found, remediation can take 205 days on average. The shortcomings of current cybersecurity, coupled with the dramatic rise in cyberattacks, translate into significant costs: “Cybercrime costs the global economy about $445 billion every year, with the damage to business from theft of intellectual property exceeding the $160 billion loss to individuals.”

Cybercrimes can cause physical harm too. That possibility “shifts the conversation from business disruption to physical harm with liability likely ending with the CEO.” Gartner expects that by 2025, cybercriminals will “have weaponized operational technology environments successfully enough to cause human casualties.” Further, security incidents related to cyber-physical systems (CPSs) are expected to create personal liability for 75% of CEOs by 2024. And even without factoring “the actual value of a human life into the equation,” CPS attacks are predicted to have a financial impact of more than $50 billion by 2023.

Modern countermeasures include zero trust systems, artificial intelligence, and automation

The key to effective cybersecurity is working smarter, not harder. And working smarter in cybersecurity requires a march toward Zero Trust Architecture and autonomous cyber, powered by AI and intelligent automation. Modernizing systems with the zero trust security paradigm and machine intelligence provides powerful countermeasures to cyberattacks.

The White House describes ‘Zero Trust Architecture’ in the following way:

“A security model, a set of system design principles, and a coordinated cybersecurity and system management strategy based on an acknowledgement that threats exist both inside and outside traditional network boundaries. The Zero Trust security model eliminates implicit trust in any one element, node, or service and instead requires continuous verification of the operational picture via real-time information from multiple sources to determine access and other system responses. In essence, a Zero Trust Architecture allows users full access but only to the bare minimum they need to perform their jobs. If a device is compromised, zero trust can ensure that the damage is contained. The Zero Trust Architecture security model assumes that a breach is inevitable or has likely already occurred, so it constantly limits access to only what is needed and looks for anomalous or malicious activity. Zero Trust Architecture embeds comprehensive security monitoring; granular risk-based access controls; and system security automation in a coordinated manner throughout all aspects of the infrastructure in order to focus on protecting data in real-time within a dynamic threat environment. This data-centric security model allows the concept of least-privileged access to be applied for every access decision, where the answers to the questions of who, what, when, where, and how are critical for appropriately allowing or denying access to resources based on the combination of sever.”
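The “who, what, when, where, and how” decision at the end of that definition can be made concrete with a small sketch. The Python below is a minimal illustration under stated assumptions, not a reference implementation of any standard or product: the roles, resources, business-hours window, and the `AccessRequest` and `decide` names are all hypothetical. It shows two zero trust ideas from the quote: deny by default unless a permission is explicitly granted (least privilege), and verify identity and device on every request instead of trusting anything because it comes from inside the network.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical least-privilege policy: each role is granted only the
# (resource, action) pairs it needs. Anything not listed is denied.
ROLE_PERMISSIONS = {
    "analyst": {("threat-reports", "read")},
    "admin": {("threat-reports", "read"), ("firewall-rules", "write")},
}


@dataclass(frozen=True)
class AccessRequest:
    user: str               # who
    role: str               # who (role used for least privilege)
    resource: str           # what
    action: str             # what ("read" or "write")
    hour: int               # when (0-23, local time)
    location: str           # where, e.g. "office", "home", "unknown"
    device_compliant: bool  # how: device posture check passed
    mfa_verified: bool      # how: strong authentication completed


def decide(req: AccessRequest) -> tuple[bool, str]:
    """Evaluate a single request from scratch; no trust carries over."""
    # 1. Least privilege: the (resource, action) pair must be explicitly granted.
    if (req.resource, req.action) not in ROLE_PERMISSIONS.get(req.role, set()):
        return False, "not granted to role"
    # 2. Verify device and identity on every request, even from the office.
    if not req.device_compliant:
        return False, "device failed posture check"
    if not req.mfa_verified:
        return False, "MFA required"
    # 3. Contextual risk: unknown locations and off-hours writes are denied.
    if req.location == "unknown":
        return False, "unrecognized location"
    if req.action == "write" and not 8 <= req.hour < 18:
        return False, "write outside business hours"
    return True, "allowed"


req = AccessRequest("alice", "analyst", "firewall-rules", "write", 10, "office", True, True)
print(decide(req))  # (False, 'not granted to role')
```

Note that being in the office never short-circuits the checks: every request passes through the same policy, which is the “eliminates implicit trust” idea in practice. A real deployment would draw these signals from identity, device-management, and logging systems rather than from hard-coded values.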

AI’s most significant capability is robust, lightning-fast data analysis: it can learn from far greater volumes of data, in far less time, than human security analysts ever could. Further, with the right compute infrastructure, AI can work around the clock without fatigue, analyzing trends and learning new patterns. As a security measure, AI has already been implemented in some small ways, such as biometric scanning and processing in mainstream smartphones and advanced analysis of vulnerability databases. But as advanced technology adoption accelerates, autonomous cyber is likely to provide the highest level of protection against cyberattacks. It will add resiliency to critical infrastructure and global computer networks through sophisticated algorithms and intelligently orchestrated automation that can not only detect threats better but also take corrective action to mitigate risks. Systems that actively spot errors in their security and “self-heal” by patching them in real time can significantly reduce remediation time.
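The detect-then-respond loop described above can be sketched in a few lines. This is a deliberately simple stand-in: instead of a machine learning model, it learns a statistical baseline (mean and standard deviation of past failed logins per hour) and flags accounts whose recent activity sits more than three standard deviations above it. The threshold, the sample data, and the `find_anomalies` and `respond` helpers are hypothetical, and the “response” only records an action rather than calling any real security system.

```python
from __future__ import annotations

import statistics


def find_anomalies(history: list[float], recent: dict[str, float],
                   threshold: float = 3.0) -> list[str]:
    """Return the keys in `recent` whose value lies more than `threshold`
    standard deviations above the mean of `history` (the learned baseline)."""
    mean = statistics.fmean(history)
    stdev = statistics.pstdev(history)
    if stdev == 0:
        # Degenerate baseline: flag anything above the constant value.
        return [name for name, value in recent.items() if value > mean]
    return [name for name, value in recent.items()
            if (value - mean) / stdev > threshold]


def respond(flagged: list[str]) -> list[str]:
    # Hypothetical automated response: a real system might call an identity
    # provider or firewall here; this sketch only records the intended action.
    return [f"lock account {name}" for name in flagged]


history = [2, 3, 1, 4, 2, 3, 2, 3]  # failed logins per hour seen in the past
recent = {"alice": 3, "bob": 40}    # failed logins in the latest hour
flagged = find_anomalies(history, recent)
print(flagged)           # ['bob']
print(respond(flagged))  # ['lock account bob']
```

Production systems replace the fixed z-score rule with models that learn many signals at once and adapt as behavior changes, but the shape stays the same: learn what normal looks like, flag departures automatically, and trigger a corrective action without waiting for a human analyst.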

Conclusion

Accelerating digital trends and cyber threats have elevated cybersecurity to a priority topic for corporations and governments alike. Technology and business leaders are recognizing the limitations of their organizations’ legacy systems and processes. There is a growing awareness that, in light of ever-evolving threat vectors, static and rules-based approaches are destined to fail. Augmenting cybersecurity systems with AI and automation can mitigate risks and speed up an otherwise time-consuming and costly process by identifying security breaches before the damage becomes too widespread.

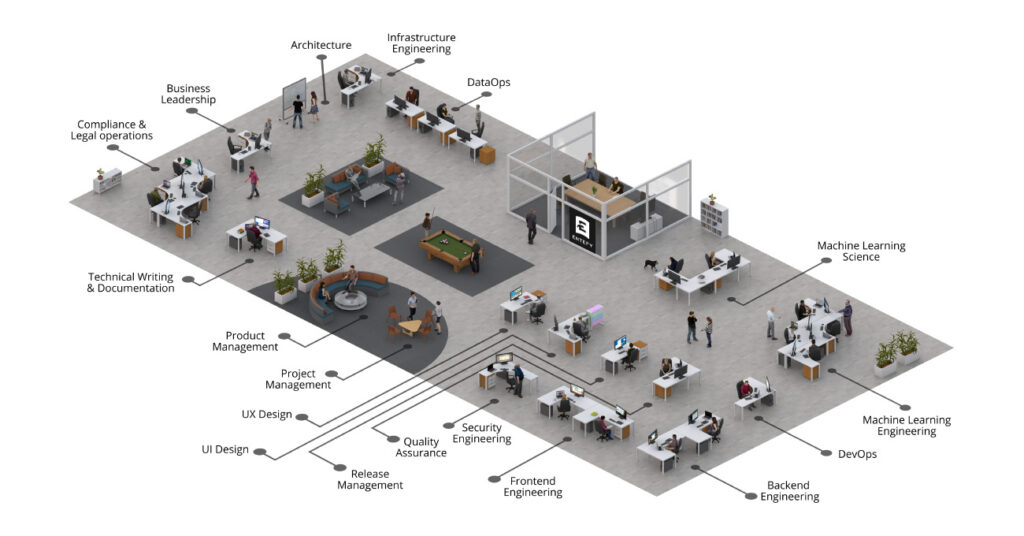

Is your enterprise prepared for what’s next in cybersecurity, artificial intelligence, and automation? Begin your enterprise AI Journey and read about the 18 skills needed to materialize your AI initiative, from ideation to production implementation.