When it comes to artificial intelligence (AI), the path from ideation to implementation can be a long and technical one. Hiring the right talent. Wrangling data. Setting up the right infrastructure. Experimenting with models. Navigating the IP landscape. Waiting for results. More experimenting. More waiting. Enterprise AI implementation can be one of the most complex initiatives for IT and business teams today. And that’s before acknowledging the time pressure created by fast-moving markets and competitors.

As an AI software and automation company, Entefy works with private and public companies both as an advanced technology provider and an innovation advisor. This has given our team a unique perspective on what it takes to successfully bring AI to life. Our experience has taught us how to avoid major missteps organizations often make when developing or adopting AI and machine learning (ML) capabilities. These 5 missteps can derail productivity and growth at any organization:

Unrealistic expectations

“I hear that AI is the future. So let’s build some algorithms, point them at our data, and we should be awash in new insights by the end of the week.” Success with AI begins with adopting a more nuanced understanding of what artificial intelligence can and can’t do. AI and machine learning can indeed create remarkable new insights and capabilities. But you need to align your expectations with reality. This isn’t a new lesson. Enterprise-scale software deployments demonstrate this idea time and again. Software itself isn’t magic. The magic emerges from forward-thinking application design and the effective integration of new capabilities into business processes. Think about your AI program in the same way, and you’ll be on the path to long-term success.

Expecting AI to instantly deliver transformative capabilities across your entire organization is unreasonable. Over the short term, narrowly defined projects can indeed be quickly deployed to deliver impressive impact. But to expect spontaneous intelligence to spring to life immediately can lead to missed expectations. The best way to view AI today is to focus on the “learning” in machine learning, not the “intelligence” in artificial intelligence.

Development of advanced AI/ML systems is experimental in nature. Algorithmic tuning (iterative improvement) can be time-intensive and can cause unanticipated delays along the way. Equally time-intensive is data curation, a key prerequisite for training. Because of this, precise cost and ROI projections are difficult to make upfront.

Short-term tactics without long-term planning

It is easy to fall in love with AI. So it’s a common mistake to prioritize technology over goals and outcomes. Symptoms include approaching every problem with a popular technique or over-relying on one tool or framework. Instead, invest time in identifying needs and priorities before moving on to AI vendor selection or development planning.

Approach an AI project with a defined end goal. What problem are we solving? Ensure you have a clear understanding of the potential benefits as well as the impact to the existing business process. Turn to technology considerations only after you have a clear understanding of the problem you want to solve.

To better understand the limitations of AI, start by looking at information silos, the kind that result when teams, departments, and divisions store information in isolation from one another. Silos limit access to critical knowledge and create issues around data availability and integrity. The root cause is prioritizing short-term needs over long-term interoperability. With AI, this happens when companies develop multiple narrowly scoped expert systems that can’t be leveraged to solve other business problems. Working with AI providers who offer diverse intelligence capabilities can go a long way toward avoiding AI silos and, over time, increasing ROI.

Long-term planning should also consider compliance and legislation, especially as they pertain to data privacy. Without guidelines for sourcing, training, and using data, organizations risk violating privacy rules and regulations. The global reach of the EU General Data Protection Regulation (GDPR), combined with the growing trend toward data privacy legislation in the U.S., makes the treatment of data more complex and important than ever. Don’t let short-term considerations compromise your long-term compliance obligations under these laws.

Model mania

The first rule of AI modeling is to resist the urge to jump straight into code and algorithms before you have identified your goals and intended results. After that, you can begin evaluating how to leverage specific models and frameworks with purpose. For example, starting with the idea that deep learning is going to “work magic” is putting the proverbial cart before the horse. You need a destination before you can make any effective decisions.

There is a lot of misinformation about which AI methods work best for specific use cases or industries. Deep learning doesn’t always outperform classical machine learning, and industry-specific AI will not necessarily give you the best results. So try your best to be results-driven, not method-driven. And don’t let trends influence that decision. For example, neural networks have been going in and out of style for decades, ever since researchers first proposed them in 1943.

When making decisions about model selection, it’s necessary to consider 3 key factors: time, compute, and performance. A sophisticated deep learning approach may yield highly accurate results, but it often does so by relying on costly CPU/GPU horsepower. Different algorithms have different costs and benefits in these areas.
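
The trade-off among time, compute, and performance can be made explicit before any modeling starts. The following is a minimal Python sketch, not a definitive method: the `Candidate` class, the `pick_model` function, the budget limits, and every number in it are hypothetical placeholders chosen for illustration, not measurements.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Candidate:
    """One model option described by the three factors (all values hypothetical)."""
    name: str
    train_hours: float   # time: estimated hours to train and tune
    compute_cost: float  # compute: estimated spend for that training
    score: float         # performance: held-out metric, higher is better


def pick_model(candidates: List[Candidate],
               max_hours: float,
               max_cost: float) -> Optional[Candidate]:
    """Return the best-scoring candidate that fits both budgets, or None."""
    feasible = [
        c for c in candidates
        if c.train_hours <= max_hours and c.compute_cost <= max_cost
    ]
    return max(feasible, key=lambda c: c.score, default=None)


# Placeholder estimates for illustration only.
candidates = [
    Candidate("logistic_regression", train_hours=0.1, compute_cost=1.0, score=0.86),
    Candidate("random_forest", train_hours=0.5, compute_cost=5.0, score=0.90),
    Candidate("deep_network", train_hours=12.0, compute_cost=400.0, score=0.92),
]

choice = pick_model(candidates, max_hours=2.0, max_cost=50.0)
print(choice.name if choice else "no candidate fits the budget")
```

With these placeholder figures, the sketch selects `random_forest`: the deep network scores highest but exceeds both budgets. The point is the framing, which puts goals and constraints ahead of any particular method.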

The lesson is simple: A specific machine learning technique is either effective for achieving your specific goal, or it is not. When a particular approach works in the context of one problem, it’s natural to prioritize that approach when tackling the next problem. Random decision forests, for instance, are powerful and flexible algorithms that can be broadly applied to many problems. But resist settling into a comfort zone and know that AI success comes from frequent and ongoing experimentation.

Data considerations

Data matters. Good data can’t fix a bad model, but bad data can ruin a good model. It is a myth that AI/ML success requires massive datasets. In practice, data quality is often more likely to determine the success of your project. The challenge is two-fold. First, it’s necessary to understand how the structure of your data relates to your overarching goal. Second, the value of proper modeling can’t be overstated, no matter how large the dataset.

Be sure to consider the 4 Vs of data to ensure success in advanced AI initiatives. The 4 Vs are data volume, variety, velocity, and veracity. The road from data to insights can be patchy and long, requiring many types of expertise. Dealing with the 4 Vs early in the exploration process can help accelerate discovery and unlock otherwise hidden value.
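
Of the 4 Vs, veracity is often the simplest to start checking. As a rough sketch, assuming records arrive as Python dictionaries, a first-pass audit might count incomplete and duplicate records. The `audit_records` function, the field names, and the sample records are hypothetical and only illustrate the idea.

```python
from typing import Dict, Iterable, List


def audit_records(records: Iterable[dict], required_fields: List[str]) -> Dict[str, int]:
    """Count total, incomplete, and duplicate records (a simple veracity check)."""
    seen = set()
    report = {"total": 0, "missing": 0, "duplicates": 0}
    for rec in records:
        report["total"] += 1
        # A record is incomplete if any required field is absent, None, or empty.
        if any(rec.get(field) in (None, "") for field in required_fields):
            report["missing"] += 1
        # Two records are duplicates if all required fields match exactly.
        key = tuple(rec.get(field) for field in required_fields)
        if key in seen:
            report["duplicates"] += 1
        else:
            seen.add(key)
    return report


# Hypothetical sample records for illustration only.
records = [
    {"id": 1, "label": "invoice"},
    {"id": 1, "label": "invoice"},  # exact duplicate of the first record
    {"id": 2, "label": ""},         # empty label counts as missing
    {"id": 3, "label": "receipt"},
]

report = audit_records(records, required_fields=["id", "label"])
print(report)
```

A check like this will not settle what the right curation steps are, since those remain problem-specific, but it surfaces basic quality issues before any model is trained.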

The successful preparation and processing of data is a highly complex exercise in multi-dimensional chess: every consideration is connected to multiple other considerations. Common issues include ineffective pre-processing of data, overly aggressive dimensionality reduction that oversimplifies the data, excessive data wrangling, and poorly annotated datasets. And there’s no single best practice. Data curation at its core is problem-specific.

Underestimating the human element

Large-scale rollouts often fail due to a range of human factors. Users aren’t properly trained. Features don’t enhance existing workflows. UI/UX is confusing. The company culture isn’t AI-forward.

Full realization of the benefits of AI starts with an empowered, educated workforce. Best practices include continuous, organization-wide technical training as well as leadership development for key champions of the project.

When it comes to hiring AI/ML talent, the situation on the ground is sobering for any organization with ambitions to rapidly scale internal AI capabilities. Stories of newly minted machine learning graduates fetching steep salaries are real, as practically every large company on the planet drives up demand for a limited pool of qualified candidates. Then there’s the reality of ML resume inflation, where some job seekers add machine learning credentials without the necessary skills or experience to deliver real value.

Traditional software system development follows the plan-design-build-test-deploy framework. AI development follows a slightly different path due to its experimental nature. Much time and effort is needed to identify and curate the right datasets, train models, and optimize model performance. Ensure your technical and business teams align on these differences and that they have the required skills to remain productive in this new environment.

Conclusion

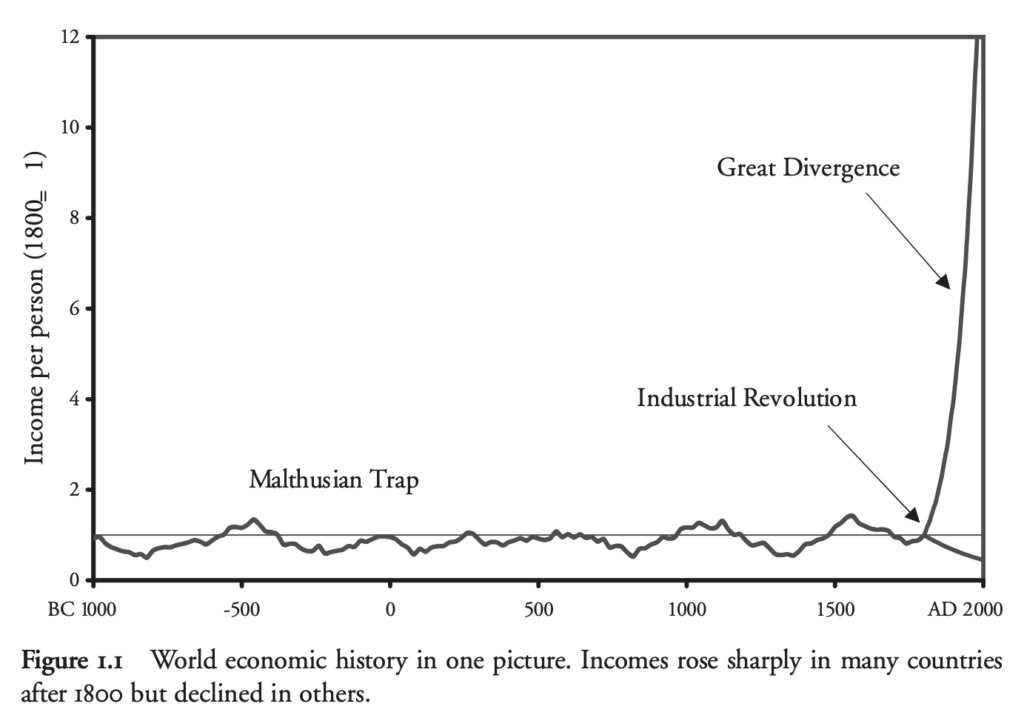

There are countless parallels between the early adoption of enterprise software decades ago and the rollout of AI and machine learning today. In both cases, organizations have faced pressure to leverage the power of new capabilities quickly and effectively. The path to success is complex and fraught with pitfalls, covering everything from personnel training to thoughtfully scripted rollouts.

The lesson of that earlier time was this: After all of the strategic and tactical wrinkles of software implementation were addressed, the new solutions did indeed make a significant impact on people and their organizations. The AI story is no different. Deploying intelligence capabilities can be challenging, but the competitive advantages they confer are transformational.