You click through to a dated-looking website. A video with the mysterious title “Real-time Facial Reenactment” begins to play. In the video, a tabletop TV shows cable news footage of President George W. Bush. Beside the TV, a young man sits in front of a webcam, stretching his mouth and arching his eyebrows dramatically like a cartoon character. And then it clicks. The President is making the exact same facial movements as the young man, perfectly mirroring his exaggerated expressions. The seated figure is, to all appearances, controlling a President of the United States, or at least his image on a video screen. The video, with 4 million views and counting, is worth watching to see this digital puppetry in action.

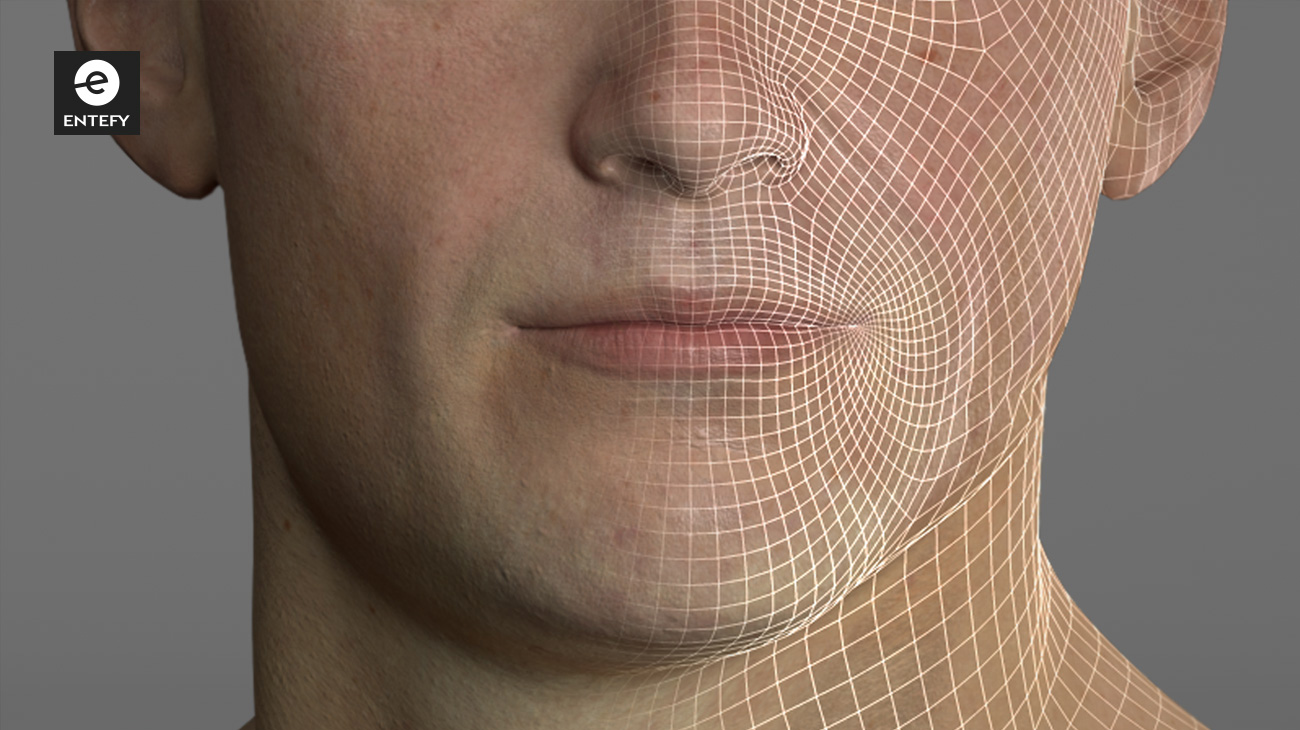

Welcome to the 21st-century version of “seeing is believing.” The video in question is the product of a group of university researchers working in a specialty field of artificial intelligence called computer vision. Computer vision algorithms can do things like digitally map one face onto another in real time, allowing anyone with a webcam to project their own facial expressions onto a video of a second person. No special equipment or expensive technology is required: the researchers used an off-the-shelf webcam and publicly available YouTube videos of American presidents and celebrities.

AI-powered computer vision technologies that manipulate video can be impressively realistic, often eerie, and just the sort of thing that should ignite discussions of authenticity in the age of “fake news.”

Photoshop, the verb

The photo editing software Photoshop has been around so long at this point that we use it as a verb. But AI computer vision technologies with the power to manipulate what we see are something else entirely. After all, it’s one thing to airbrush a model in an advertisement and quite another to manipulate what’s said on the nightly newscast.

To see why, consider a few facts. Billions of people worldwide, including 2 out of 3 Americans, rely on social media and other digital sources for news. And in doing so, they overwhelmingly choose video as their preferred format. These informational ecosystems are already struggling with “fake news,” the term for deliberate disinformation masquerading as legitimate reporting.

If AI computer vision enables low-cost, highly convincing tools for manipulating video—for making anyone appear to say anything someone might want them to say—those tools have an extremely high potential for mayhem, or worse. After all, once we see something, we can’t unsee it. The brain won’t let us. Even if later we learn that what we saw was fake, that first impression remains.

AI raises some big questions, and they’re important ones. What happens when we can no longer rely on “seeing is believing”? How can we ensure authenticity in the digital age? Technology is moving fast, and new AI tools remind us that sometimes we need to set aside the cool factor and think instead about how specific technologies affect our lives, because digital technology is at its best when it improves lives and empowers people.

Putting the dead to work

Part of the reason that AI computer vision technology is so remarkable is that it compresses hours or days of professional work into something that happens in the moment.

The technology has a clear application in entertainment. The production of the immersive worlds and intricate characters we see in film and video games is quite expensive. CGI (computer-generated imagery) is lauded for its ability to make the unreal appear as close to real as possible, to turn imagination into reality. But there is a lot of effort behind these transformations.

CGI may fill theaters, but we’ve also seen that people place limits on the kind of unreality they’ll accept. Look no further than Rogue One, the 2016 film set in George Lucas’ Star Wars universe. The film’s story takes place just before the events of the original 1977 Star Wars film. The Rogue One filmmakers wanted to feature one of the characters from the original film but ran into a small problem: the actor, Peter Cushing, died in 1994.

CGI to the rescue. The producers used computer graphics to generate a digital version of Cushing, then brought him to simulated life by having another actor, Guy Henry, perform and voice Cushing’s character in motion capture gear. The result was a rather convincing resurrection of Cushing that simulated everything from his facial tics to the differences in lighting between the films.

If you’ve seen the film, you probably remember this character, because watching a dead actor brought back to life…isn’t quite right. Not surprisingly, the film drew critics who raised ethical concerns about the dignity of the dead and the right to use a deceased actor’s likeness. It was exactly the kind of unease we mean when we talk about the “uncanny valley.”

Rogue One wasn’t the first digital resurrection to raise these concerns. Other reanimations have also met with mixed responses from the public, from Tupac’s holographic performance at Coachella to commercials in which Marilyn Monroe, John Wayne, and Steve McQueen posthumously pitch products. Despite the public’s unease, more than $3 billion is spent annually on marketing and licensing deceased celebrities for advertisements. But none of this suggests that we’re ready to give up “seeing is believing” just yet.

Authenticity is timeless

The invention of Photoshop didn’t cause people to distrust every digital photo. We still happily share vacation pictures and selfies with celebrities without worrying that our friends will doubt their authenticity.

The challenge with AI computer vision tech isn’t that it might be misused; after all, practically any technology can be misused. It’s that it joins a growing list of technologies that are developing so quickly that we haven’t had enough time to decide collectively how we want them to be used. This is as true of some forms of AI as it is of robotics and gene editing.

But if we did come together to have this discussion, what we’re likely to find is a lot of common ground around the idea that the best way to use all of these revolutionary technologies is to make life better for people, solve global problems, and empower individuals. After all, for every potential misuse of computer vision AI, there are hundreds of positive and impactful applications like real-time monitoring of crime, improved disaster response, automated medical diagnosis, and on and on.

Fake news is a problem worth solving. But until we’ve successfully leveraged advanced technology in support of truth and authenticity, let’s not abandon “seeing is believing” just yet.