Imagine being able to set the perfect price, instantly, for every customer, every product, and every market condition. With artificial intelligence (AI), that vision is rapidly becoming reality. Dynamic pricing powered by advanced AI is allowing companies to boost revenue and stay ahead of competition.

Today’s retail landscape is more unpredictable than ever. With rising costs, unstable supply chains, ever [constantly] evolving consumer preferences, and relentless pricing pressure from competitors, traditional pricing methods are reaching their limits. The old playbook, based on rigid rules and static models, simply can’t keep up.

To remain competitive, retailers are shifting to AI-powered pricing systems that thrive on complexity. These modern solutions analyze real-time data across countless variables, allowing businesses to react instantly and intelligently to changing market conditions. Instead of being overwhelmed by volatility, retailers can now use it to their advantage. The companies which have made the leap are already seeing tangible benefits including improved revenue performance and gross profit increases of 5% to 10%.

AI-powered dynamic pricing is ushering in a new era of precision-led revenue strategy, where profitability, personalization, and timing are aligned to market realities.

Understanding dynamic pricing

Dynamic pricing is a strategy where the price of a product or service is constantly adjusted in response to real-time changes to market conditions, demand, competitor pricing, and customer behavior. Rather than keeping prices fixed, businesses using dynamic pricing rely on algorithms and data analysis to make informed decisions, reflecting the changing environment. This approach allows companies to tailor prices to specific customer segments, or even individual buyers, considering factors such as time of day, location, seasonality, and product availability.

Industries such as retail (including e-commerce), hospitality, transportation, logistics, and entertainment, use dynamic pricing. By adopting this strategy, businesses can maximize revenue, improve profit margins, and respond more effectively to market fluctuations. Advanced machine intelligence plays a key role in making dynamic pricing a powerful tool for companies aiming to stay competitive and agile in rapidly changing markets. “The speed, sophistication, and scale of AI-based tools can boost EBITDA by 2 to 5 percentage points when B2B and B2C companies use them to improve aspects of pricing that have the greatest leverage within their organizations.”

What once required weeks of analysis and executive debate can now be executed in milliseconds. Each industry brings its own complexities, but all share the common need to move faster, personalize more deeply, and compete more intelligently.

Evolution from static to intelligent pricing

For decades, many businesses have relied on static pricing models which in essence set prices based on historical data, fixed rules, or gut feelings. While this approach was often sufficient in slower-moving markets, it falls short in today’s volatile and increasingly competitive environment. Traditional static pricing lacks flexibility and fails to account for the many changes that occur inside or outside of a company. As a result, businesses miss opportunities to optimize revenue and risk alienating customers with prices that don’t reflect current market realities. In highly competitive domains such as retail and travel, these outdated methods can lead to lost sales, decreased margins, and diminished market share. Thus, the need for intelligent, dynamic pricing.

Dynamic pricing leverages AI and machine learning to continuously analyze vast amounts of data, from customer behavior and inventory levels to competitor prices and broader market trends. Unlike static models, AI-driven systems can optimize for various business goals. These technologies sift through patterns and signals invisible to humans, enabling businesses to make data-backed pricing decisions instantly. Real-time analytics mixed with predictive modeling means pricing can be adjusted reactively or proactively.

Key advantages of the dynamic pricing approach

Aligning profitability with market realities. One of the most compelling advantages of AI-powered pricing is the ability to enhance profitability while staying grounded in market conditions. By accurately assessing demand elasticity and competitor pricing, AI models recommend prices that capture the maximum willingness to pay without deterring customers. Industries such as airlines have long used dynamic pricing to boost revenue by adjusting fares based on booking windows and seat availability. Similarly, e-commerce platforms dynamically alter prices throughout the day in response to competitors and inventory levels, capturing additional sales and margin. These examples underscore how AI transforms pricing from a guesswork exercise into a precise, market-aligned revenue lever.

Personalization and tailoring prices to customers. Beyond maximizing revenue, AI-powered pricing opens the door to personalized pricing strategies that cater to individual customer preferences and behaviors. By analyzing purchase history, browsing patterns, and demographic data, businesses can offer prices or discounts that resonate specifically with different customer segments. This personalization not only drives sales but also enhances the customer experience by making offers feel relevant and fair. However, it is crucial for companies to balance personalization with transparency to maintain trust and avoid perceptions of unfairness. Ethical considerations, such as ensuring data privacy and equitable pricing, are important as personalized pricing becomes more widespread. By offering competitive and fair pricing based on demand, businesses can attract new customers and build trust. Personalized pricing strategies, tailored to individual customer preferences, can also enhance brand perception and loyalty, leading to repeat purchases and positive word-of-mouth.

Real-Time price optimizations. In dynamic markets, timing can make or break a sale. AI-driven pricing models continuously monitor signals, from inventory changes to competitor moves, and adjust prices instantly. This responsiveness allows businesses to capitalize on short-lived demand surges or strategically discount excess stock before it loses value. For example, ride-sharing services use surge pricing algorithms to increase fares during peak demand, balancing supply and demand efficiently. Retailers can similarly implement flash sales triggered by data-driven insights. The ability to optimize pricing in real time ensures companies stay competitive, agile, and profitable regardless of market volatility.

Enhanced competitiveness. Dynamic pricing acts as a crucial lever for companies seeking to sharpen their competitive edge. This paradigm is about more than just fluctuating prices; it’s a strategic approach that empowers businesses to outperform competitors by staying agile and responsive. This agility is particularly valuable in fast-paced markets where conditions can shift rapidly. Instead of being tied to static pricing that can quickly become outdated, companies employing dynamic pricing can proactively adjust their prices to remain appealing to customers and outmaneuver rivals. For example, an e-commerce retailer utilizing dynamic pricing can leverage software to match or even beat competitor prices, ensuring they remain competitive in a crowded marketplace. They can also use dynamic pricing to capitalize on fluctuations in demand, such as increasing prices during peak seasons or when competitors are experiencing inventory shortages. This allows them to maximize revenue and attract customers who are willing to pay a premium for immediate availability.

Risk Mitigation. By adjusting prices to reflect immediate market conditions, businesses can reduce the risk of losing money on products that become obsolete or outdated. For instance, companies dealing with perishable goods and services can use dynamic pricing to lower prices on items nearing their expiration date, reducing waste and maximizing revenue. By incorporating robust data analysis and algorithms, businesses can make more informed decisions, minimizing the potential for pricing errors and unintended financial consequences.

Examples of AI-driven pricing strategies

Dynamic pricing is not a one-size-fits-all solution. Its application varies widely depending on industry and individual business needs. Below are several industry-specific examples showing how impactful intelligent pricing is being deployed.

1. Retail

In the retail world, a host of contextual variables including shopper behavior, historical sales data, competitor moves, inventory levels, and even weather forecasts, can be fed to algorithms that recommend or autonomously enact price changes with greater precision than antiquated manual methods.

A promising advancement in this space is the application of reinforcement learning, specifically Q-learning, which adapts continuously based on instant feedback. This method outperformed traditional pricing strategies by learning to optimize price points that maximize long-term revenue rather than short-term margins. Reinforcement learning’s key advantage is its ability to evolve without needing predefined pricing rules. This is a critical capability in retail’s fast-changing markets.

Physical retailers approach in-store dynamic pricing by replacing rigid, manual processes with localized, and algorithmically optimized pricing strategies. Implementations typically begin with integrating AI models into the retailer’s POS, ERP, and pricing systems to enable real-time visibility across the store network. Machine learning models process both internal and external data to forecast demand, identify pricing opportunities, and generate optimized recommendations down to the SKU and store levels. Retailers then execute these recommendations through systems such as electronic shelf labels (ESLs) or centralized price management platforms, allowing for seamless updates without manual intervention.

To ensure strategic control within AI systems, retailers can also set business rules and constraints including margin floors, brand pricing limits, and category-level pricing architecture. Some even layer in loyalty and behavioral data to enable customer-segment-specific promotions or tailored pricing responses. Ultimately, the impact can be measured by increased margin, faster inventory turnover, reduced overstock and markdown dependency, as well as improved pricing agility and customer satisfaction levels across the physical store footprint.

2. Real Estate

Dynamic pricing is increasingly becoming a strategic tool in the real estate industry, particularly in the rental segments, where demand can fluctuate regularly and revenue optimization is a priority. Traditionally, property owners and managers set rental rates based on static factors—comparable listings, historical performance, seasonal assumptions. However, with the integration of machine learning and automation, the industry is shifting toward more responsive, dynamic pricing models that reflect market conditions with far greater precision.

Short-term rentals. Managers of rental properties or hosts of vacation homes use AI to automatically adjust nightly rates based on demand signals. These systems factor in variables such as local events, seasonality, day-of-week trends, booking windows, and competitive listings in the area. The goal is to maximize occupancy during low-demand periods and capitalize on revenue during peak times. This strategy often results in significantly higher annual revenue compared to manually set rates.

Long-term and multifamily rentals. Property owners and real estate management firms can optimize lease rates and improve portfolio performance using dynamic pricing. These entities can monitor leasing velocity, unit-specific demand, local competition, and market saturation to inform instant rent adjustments. Dynamic pricing helps strike a balance between occupancy and revenue, playing a role in renewal pricing, ensuring that lease extensions are competitively priced based on both market trends and individual tenant profiles.

Commercial real estate. Select areas of commercial real estate, particularly coworking spaces and flexible office providers, are experimenting with usage-based models. Companies in these fields use dynamic pricing to adjust desk and private office rates based on availability, location desirability, and seasonal demand. In some retail leasing arrangements, landlords are exploring variable pricing models that tie rent to performance metrics such as foot traffic or sales volume, creating more flexible and incentive-aligned agreements.

Overall, dynamic pricing is ushering in a more intelligent and responsive era in real estate management. As AI and data integration become more widespread, these pricing strategies are expected to expand across asset classes within the real estate sector, thereby transforming rent setting from a periodic task into a dynamic lever for growth and operational efficiency.

3. Travel and Hospitality

By analyzing booking trends, competitor pricing, customer behavior, and even local events or weather patterns, AI helps travel companies predict demand and adjust prices accordingly, optimizing revenue during peak periods or luring customers with compelling offers during slower times to maximize occupancy and capacity utilization. Airlines and hotels that have adopted this technology have reported revenue increases and improved occupancy rates, suggesting that AI-driven dynamic pricing is not just a passing trend. Further, AI systems can analyze specific individual customer data, such as browsing history and past bookings, to offer tailored discounts or bundled packages which in turn increases customer satisfaction and loyalty. It also streamlines operations by automating pricing adjustments, freeing up staff to focus on customer service or other strategic initiatives.

4. Logistics and freight

For the logistics and freight industry, where margins are slim and demand can be volatile, AI-powered pricing technology offers a data-centric approach to improving both profitability and operational performance. AI tools enable instant pricing adjustments based on a number of factors including cargo type, delivery deadlines, route efficiency, fuel costs, and fleet capacity.

Generative AI is expected to support 25% of logistics KPI reporting by 2028, enhancing not just the speed of decision-making but also the accuracy of pricing and capacity planning. This capability will allow logistics companies to price services based on supply-demand levels, ultimately helping them improve both load profitability and customer service levels. Moreover, high-performing supply chain organizations are deploying AI-powered solutions at more than twice the rate of their lower-performing counterparts. This cements the industry trend toward algorithmic decision-making, where agile insights enable logistics firms to price shipments based on fluctuating costs, delays, and inventory needs. The result is better load balancing, reduced underutilization, and higher yield per mile or container.

5. Manufacturing

In manufacturing, creating products often involve complex configurations, long lead times, and fluctuating input costs for raw materials, energy, etc. Buyers frequently demand custom quotes based on volume, service levels, and payment terms. In this environment, machine intelligence helps manufacturers manage complexity through algorithmic pricing.

AI-powered tools are being integrated with enterprise systems such as CRM and ERP platforms to analyze historical transactions, forecast commodity price shifts, and segment customers by behavior and projected profitability. These tools can also support dynamic bundling, where pricing for products and services is packaged based on total cost-to-serve rather than simple traditional list pricing. For example, a car parts manufacturer can use AI to monitor steel prices, adjusting the price of affected products within hours to maintain profit margins when costs fluctuate. This immediate responsiveness allows manufacturers to adapt quickly to supply chain disruptions or changes to the availability of materials and components, ensuring that pricing strategies remain profitable and aligned with production capabilities. The outcome is greater pricing control, better resource use, and improved alignment between sales and operational strategies.

6. Wholesale and distribution

Traditionally, pricing in distribution and wholesale domains has been managed through manual processes and legacy software, requiring significant time and personnel investment. These conventional methods often lack adaptability in fast-changing markets. By analyzing historical sales data and market trends, AI can forecast future demand, allowing distributors to proactively adjust their pricing strategies and optimize inventory levels. This not only helps avoid costly stockouts or overstock situations but also ensures that resources are allocated efficiently.

Modern intelligent pricing systems offer a more responsive and scalable approach, enabling businesses to optimize prices in real time and uncover new paths to growth and profitability. For example, “a global B2B petrochemical company captured around $100 million in additional earnings across six business units with a machine-learning-enabled dynamic pricing model. To drive dynamic pricing recommendations, the technology clustered customers into microsegments based on more than 100 characteristics.”

Optimizing offers and incentives

For businesses, AI isn’t just helping set the right price at the right time. It’s also identifying the most effective combination of incentives, discounts, and value-added perks to drive customer action. This shift reflects a growing recognition that customers respond to a range of motivations beyond mere dollars, including incentives such as convenience, perceived value, exclusivity, and timing. Here are a few examples:

In real estate, property managers are using AI models to test whether prospects are more likely to sign a lease when offered reduced monthly rent or an upfront incentive similar to “first month free,” free parking, or waived deposit fees. These systems analyze renter profiles (income, credit, household size), market conditions (vacancy rates, seasonality), and even location-specific competition to determine which offer optimizes both occupancy and revenue.

In the gaming industry, physical casinos and online platforms are leveraging AI to deploy personalized incentives based on real-time engagement data. For instance, during slow periods or when player activity drops, AI models can trigger time-sensitive offers (bonus spins, elevated odds, or loyalty points multipliers). The aim isn’t just to retain players but to increase dwell time and lifetime value by aligning incentives with player habits and preferences.

Businesses serving airline and hospitality industries are experimenting with flexible, AI-generated packages (bundling upgrades, lounge access, or late checkouts with time-limited dynamic pricing). Those booking last-minute travel may receive a different bundle than those booking well in advance, even if the base fare is similar. This form of offer engineering goes beyond pricing strategy and begins to resemble behavioral science in action, identifying psychological cues that increase conversion.

Ultimately, the more holistic approach of combining dynamic pricing with smart offer bundling gives businesses the opportunity to move from simply asking “What price will this customer pay?” to “What experience, offer, or message will prompt action?” The result is a more nuanced, profitable, and personalized engagement model that benefits both the business and the consumer, ultimately increasing customer retention and loyalty as well as near-term profits.

Conclusion

The future of pricing policy will be more than dynamic, it will be intelligent, predictive, and deeply customer centric. Industries that adopt intelligent pricing models will gain the critical edge of understanding customer demand, adjusting to market volatility, and unlocking new revenue possibilities, that traditional methods cannot.

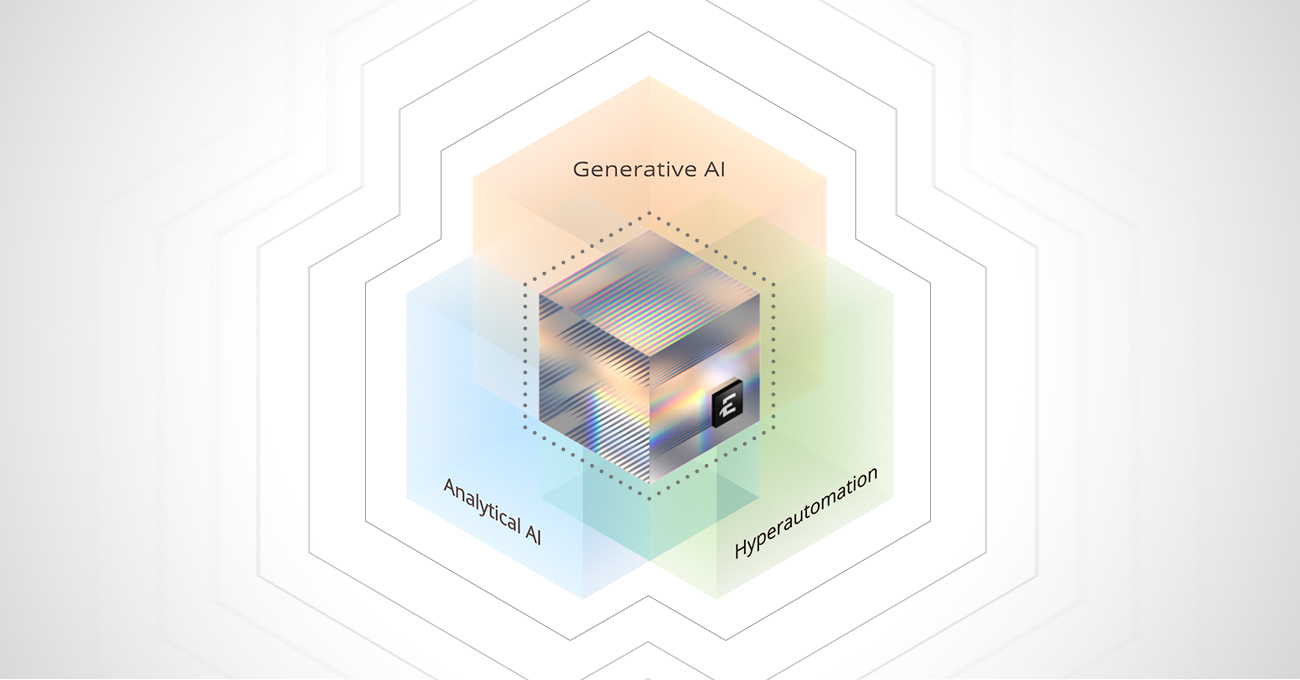

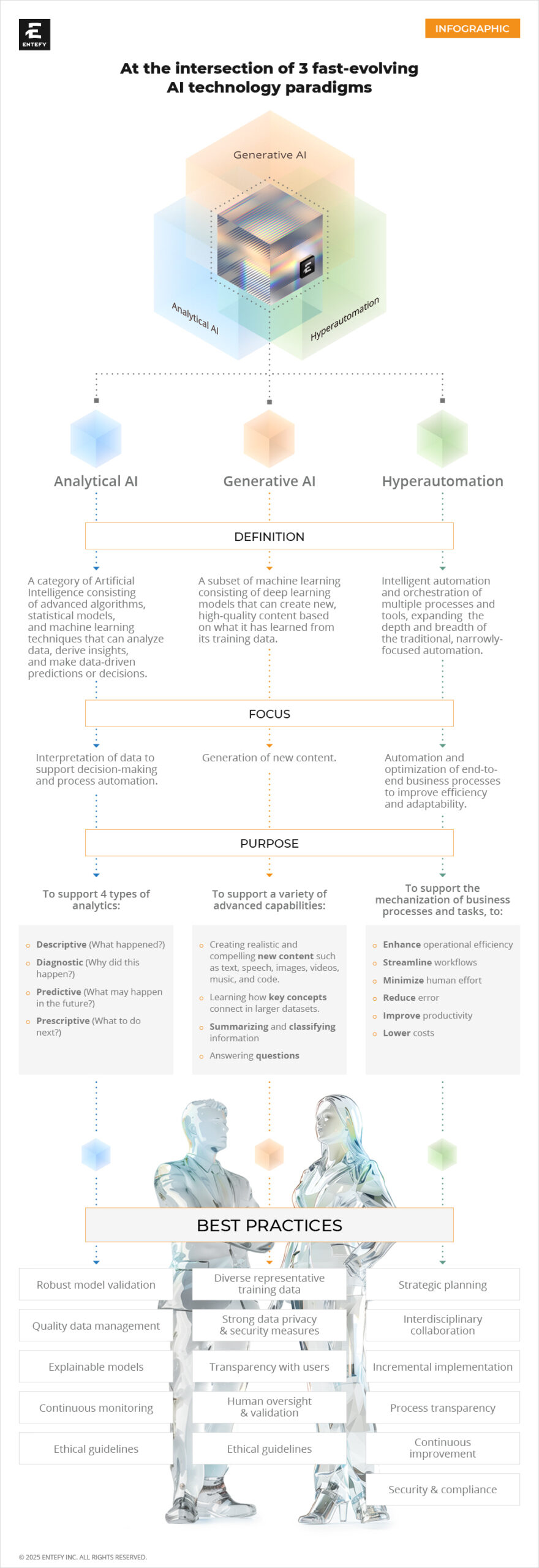

At Entefy, we are passionate about breakthrough technologies that save people time so they can live and work better. The 24/7 demand for products, services, and personalized experiences is compelling businesses to optimize and, in many cases, reinvent the way organizations operate to ensure resiliency and persistent growth.

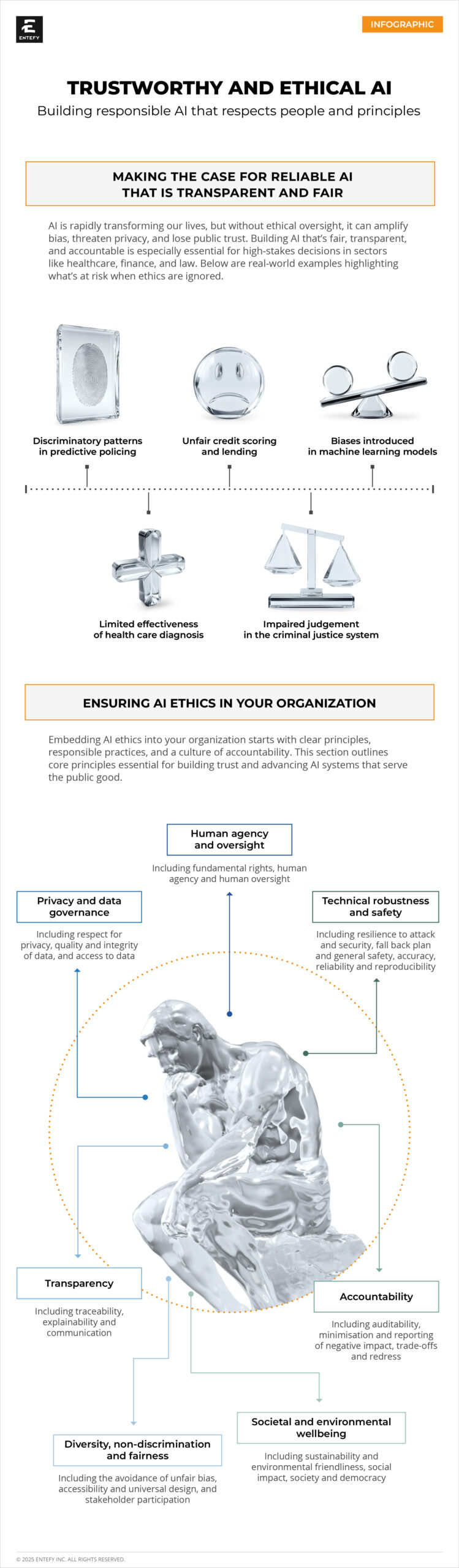

Learn more about the inevitable impact of AI on businesses, the three phases of the enterprise AI journey, and the need for ethical AI.

ABOUT ENTEFY

Entefy is an enterprise AI software and hyperautomation company. Entefy’s patented, multisensory AI technology delivers on the promise of the intelligent enterprise, at unprecedented speed and scale.

Entefy products and services help organizations transform their legacy systems and business processes—everything from knowledge management to workflows, supply chain logistics, cybersecurity, data privacy, customer engagement, quality assurance, forecasting, and more. Entefy’s customers vary in size from SMEs to large global public companies across multiple industries including financial services, healthcare, retail, and manufacturing.

To leap ahead and future proof your business with Entefy’s breakthrough AI technologies, visit www.entefy.com or contact us at contact@entefy.com.