In 1954, the psychologist Leon Festinger infiltrated a cult led by a woman whom he dubbed Marian Keech. Keech believed she received messages from an alien race telling her that on a certain date a flying saucer would appear to collect her and her supporters, at which point a catastrophic flood would decimate the remaining population of the earth.

Events didn’t exactly work out that way. But Festinger hadn’t joined the cult to test the validity of Keech’s claims. Instead, he wanted to observe how the cult would react to the discovery that their prophecy had failed. Would they admit the error and change their beliefs?

Festinger is now a mainstay in psychology textbooks due to his theories on cognitive dissonance, which describe a state of mind in which a person holds two or more conflicting thoughts, ideas, or opinions. Cognitive dissonance is by its nature an uncomfortable condition, one that the brain wants to quickly resolve by reinterpreting one or more of the conflicting thoughts, which lets it return to a stable, coherent state.

But of course, reinterpreting facts or beliefs on the fly can be short-sighted. Take for instance the cult that Festinger studied—when the alien apocalypse didn’t materialize, most cult members chose to simply reinterpret what did happen (nothing) as a sign that the aliens had, in fact, saved humanity in response to the cult’s efforts and faithfulness. They opted to restore mental coherency at the expense of truth.

But don’t let the outlandishness of an alien cult fool you. The bias its members exhibited, interpreting the outside world in terms of pre-existing expectations, is something that we all do. In fact, bias can have beneficial aspects as a labor-saving shortcut that lightens the cognitive load on our brain. But bias is also responsible for limiting our understanding of things that are new or different. We’re not immune to it at home or at work; it can infect how we discover and interpret information, and it can shape our behavior in workplace settings.

Fortunately, having a greater awareness of confirmation bias gives us tools to limit its shortcomings at work and in life. Let’s start by looking at how confirmation bias works for and against us, explore some of the ways it can surround us in ideological bubbles, and then discuss how we can burst our own personal bias bubbles.

Unbelievable

Beliefs we hold dear feel as though they are an inseparable part of us. But this can lead to problems when new information seems to contradict them, particularly when we’re not prepared to readjust. When we reinterpret an experience to conform with our prior beliefs, we are feeding confirmation bias—we place greater emphasis on information that supports our beliefs while discrediting or ignoring conflicting information.

A meta-analysis of 54 social psychology experiments concluded that people have stronger memories of events that conflict with their expectations, yet maintain stronger preferences towards things that support them. The preference is strong enough that we devote 36% more reading time to attitude-consistent material. When we do encounter opposing information, not only are we likely to try to interpret it in a corroborating way, we sometimes go so far as to use contradiction to strengthen already existing beliefs, something researchers call the ‘backfire effect.’

Confirmation bias not only influences how we interpret new information; it also helps dictate what we go out looking for in the first place, and what we recall from our memory banks in response to certain questions and decisions.

Take for instance the question “Are you happy with your social life?” It is, on the face of it, basically the same as asking “Are you unhappy with your social life?” The state of someone’s happiness should not be swayed by the words used to ask about it, yet it often is. Those asked if they’re happy tend to call forth memories of joy, while those asked if they’re unhappy tend to remember moments of sorrow.

The effects of this associative bias can appear out of nowhere, in the absence of conscious thought. If you’re about to purchase a particular model of new car, you’ll start noticing that car all over the place. Likewise, you might be considering starting a family and begin to see the world around you filled with children. Or go through a breakup and see everyone else traveling in pairs.

If we fail to realize that this is simply a byproduct of our brains seeking efficient means of directing our attention, we end up erroneously assuming that the car in question is really popular or that there are more kids in the area than there actually are. This is a classic means of creating and maintaining stereotypes in social interactions, as we tend to see what we expect to see and neglect counterevidence.

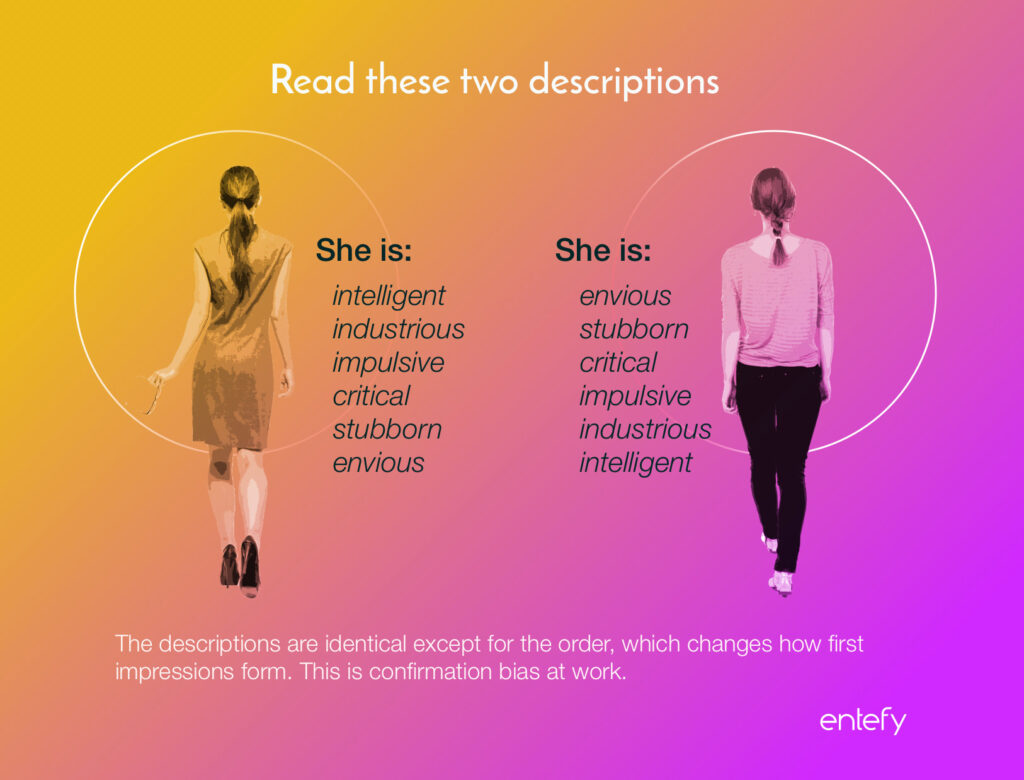

Moreover, research suggests that when we’re forming impressions of a person’s personality, we place greater importance on information we learn earlier than on information we learn later. When asked to form an opinion about someone who is “intelligent, industrious, impulsive, critical, stubborn, envious,” subjects rate the person more positively than when the same words are presented in the reverse order. Like those pesky first impressions that linger, the information we learn about first becomes the baseline against which new evidence is compared. We may adjust this baseline, but each new piece of information ends up correcting it to a smaller degree. “Intelligent” makes for a better first impression than does “envious,” and while the subsequent adjectives provide some nuance, they don’t overwrite that initial judgement.

Bias is a side effect of the brain’s need to reduce strain on its processing capabilities. By referencing past experiences and memories when finding and interpreting new information, we avoid having to start from scratch and can more effectively filter our environment for what’s relevant. But when we let bias run unchecked, the consequences can be unfortunate, especially when it comes to online news and information.

Information bubbles

Confirmation bias shows up in our news feeds and web searches because we tend to network with like-minded individuals and give more attention to belief-confirming information sources.

While search engines have not been shown to display a heavy bias in their results, the language we use in our searches can implicitly support an assumption or belief. If you believe in astrology and search for the Gemini horoscope, you’re going to find what you’re looking for without seeing (or paying much attention to) information that questions astrology itself. In a subtler sense, a simple comparison of a search for “how confirmation bias affects learning” and another for “does confirmation bias affect learning?” returns front-page search results that are noticeably different: some results appear for both queries, while others appear for only one.

When it comes to our social media feeds, the ability to choose the people and brands we follow allows us to form networks that reinforce confirmation bias. Further complicating the issue are recommendation engines, which are designed to show us what the engines think we’ll like and nothing else, minimizing our exposure to information that might provide a well-rounded view or alternative perspective.

What happens if we’re only ever exposed to people and ideas that support our existing beliefs? Those beliefs are reinforced and strengthened by an affirmation feedback loop—each news item, blog post, and status update further demonstrates what we think we already know. And when opposing ideas do manage to sneak in, people tend to quickly label those ideas “exceptions that prove the rule” given that it’s much easier to reinterpret a fact than change a fundamental belief.

Of course, how can one be expected to traverse complex informational landscapes free from this bias? The brain can only process so much information, after all. It takes time and effort to process and internalize new ideas and concepts. Plus, the Internet is filled with fake news to such an extent that it is seldom possible to check the reliability of every source we encounter. And so, even with good intentions, our bubbles grow.

Bursting the bubble

Perhaps the most important and, thankfully, simplest way to battle confirmation bias is to acknowledge that there are many sides to every story. While we may hold a strong opinion, it is but one perspective, of which there may be many more, each with its valid points and arguments. When we allow ourselves a small measure of doubt, we keep ourselves from drawing conclusions too quickly.

A more time-consuming defense against confirmation bias is to act as though you’ll need to explain yourself to someone. Researchers have found that people were more likely to critically examine information and to learn more about ideas if they believed they would need to explain them to another person who was well-informed, interested in the truth, and whose views they were not already aware of. Call it accounting for the effects of accountability.

To go even further, get in the habit of playing devil’s advocate: take the opposite view and try to argue from there. It is not always easy to see things from the other side, but then, if you cannot take an opposing perspective seriously, chances are you don’t really understand your own views, and are missing important elements required for a full understanding of the topic.

Getting to the bottom of an issue is an endeavor that requires time and effort, coupled with robust reasoning skills, and as such it is not possible for each of us to get to the bottom of every important topic. But this does not mean we are doomed to suffer at the hands of our biases. If we can simply admit to ourselves that we are missing important elements of the story—that our view is incomplete—we will be more likely to open ourselves to conflicting ideas, and less likely to cling to inconsistent viewpoints.

When it comes to the beliefs we hold dear, we may benefit when we take the time not only to find support for our views, but to discover the contradictions and counterarguments. Time to put ourselves in the other person’s shoes. Time to imagine the outsider’s perspective. Time to resolve cognitive dissonances. And time to purposefully burst our own bubbles of bias.