The evolution of communication throughout history has largely been about taming two dimensions: time and distance. That was as true of spoken language as it was of the telegraph or email. Humans have been so successful at taming these factors that, today, neither time nor distance is a meaningful bottleneck to transmitting your thoughts.

In fact, if you want to tell a friend in a different hemisphere what you just ate for lunch, the most time-consuming part of the process is physically typing or tapping out the message. Communication these days is so fast that it’s fair to call it “instantaneous,” though technically it isn’t quite there yet: with all the messaging we do in a day, the time spent typing and tapping adds up.

We still rely heavily on fingers and thumbs to get the message across, but for how long will this be the case? It’s too soon for anything but speculation, but research groups and corporations are working on new technologies that might allow us to share our ideas using thoughts alone. Flying cars, walking-talking robots, and now sci-fi telepathy? It’s a fascinating time to be alive.

But are we ready for a technology like this? Imagine being able to send a thought around the globe to anyone you wanted, no typing, speaking, or writing required. Early building blocks of such a technology are already in labs, with proofs of concept being unveiled with increasing frequency.

The main hurdle to developing this technology is the brain itself, in all its fine and complex detail. So let’s start with a look at what we know (and don’t) about how the brain processes language, then dive into examples of primitive brain-to-brain and brain-to-machine communication devices under development in labs around the world.

Understanding what the brain is saying

Packed into the skull are roughly 100 billion neurons, with more than 100 trillion synaptic connections between them, all arranged in a pattern as unique as a fingerprint. That being said, there is also a great deal of commonality between human brains. Their look and shape remain relatively consistent, as do the abilities and tasks that certain brain regions specialize in. For instance, signals from the eyes travel to the back of the brain, where the visual cortex decodes and analyzes them. The motor cortex, a strip running from near the temple to the top of the head, fires up when body parts need to move.

The commonality between brains has allowed brain regions to be reliably mapped, an effort you could call human cerebral cartography. The areas corresponding to the tongue and mouth map to the sides of the cortex, near the temple. The area corresponding to the feet can be found at the top of the brain, nestled between the two hemispheres. And so on.

The complexity of the brain is found not only in the sheer number of neurons but in their variety. There are neurons that respond to specific directions of movement and to certain shapes, for instance. It gets far more complex when we look at neurons related to appreciating art, anticipating chess moves, or converting our ideas into discrete sentences. For these actions, the brain engages in some incredibly complex calculations.

The brain learns how to interpret the world around it as it grows, and, again, this is a highly personalized process. While certain types of sensory input are routed to the same general areas in every brain, individual experience determines how each of us processes and learns to interpret what we encounter.

Which brings us to language. The word “cat” won’t be stored in the same place in your brain as in someone else’s, because each of you learned it differently, through different experiences. This is an important detail, because brain-to-brain communication in any form requires a device capable of interpreting a given pattern of neural activity as a particular idea, thought, or concept. That is an almost inconceivably difficult task.

Neurons are the basis of communication within the brain, but they are just one of its key cell types. The cortex, where all the higher-level activities we associate with human thought take place, contains neurons, with their branching dendrites and long axons that can reach distant parts of the brain, along with glial cells and blood vessels. It’s a tightly packed jumble, and any attempt to read and interpret its signals must reliably account for all of these elements.

Technology and reading the mind

As it stands today, the best ways to measure brain activity are also the most invasive. For brain-to-brain communication to become feasible, we need other ways of interacting with the brain. Ideally, we would be able to eavesdrop on the activity of individual neurons without having to open the skull.

One current method for reading brain signals is functional magnetic resonance imaging, or fMRI. This is the familiar large tube that requires a patient to lie unmoving inside it, while it measures the blood flow in certain areas of the brain. It’s cumbersome, crude, and isn’t likely to be useful in brain-to-brain communication.

Another common brain-scanning technology is the electroencephalogram, or EEG, which measures the electrical activity of brain areas using electrodes placed on the scalp. It can’t read neuronal signals in fine detail and, while it is less restrictive than lying inside a machine, it requires an electrode cap and cables.

The methods that record brain activity in finer detail typically require opening the skull and inserting tiny wires into the brain to pick up the signals generated by neurons. That isn’t easy. With upwards of 100,000 neurons and other types of cells packed into a single square millimeter of cortex tissue, there is little structural uniformity to work with. And since the brain forms scar tissue around any foreign object it detects, implanted recording devices would need to be designed to convince the brain they are native and natural.

If all of this makes brain-to-brain communication sound nearly insurmountable, we’re only getting started. So far we’ve covered just one side of the equation: reading signals. For another, unique-as-a-snowflake brain to interpret those signals, we would also need to be able to stimulate its neurons, which creates another set of engineering challenges.

Recent advances are making this less theoretical

But let’s not count science out or dismiss this concept as a pipe dream, because there are already working examples of some potential precursor technologies.

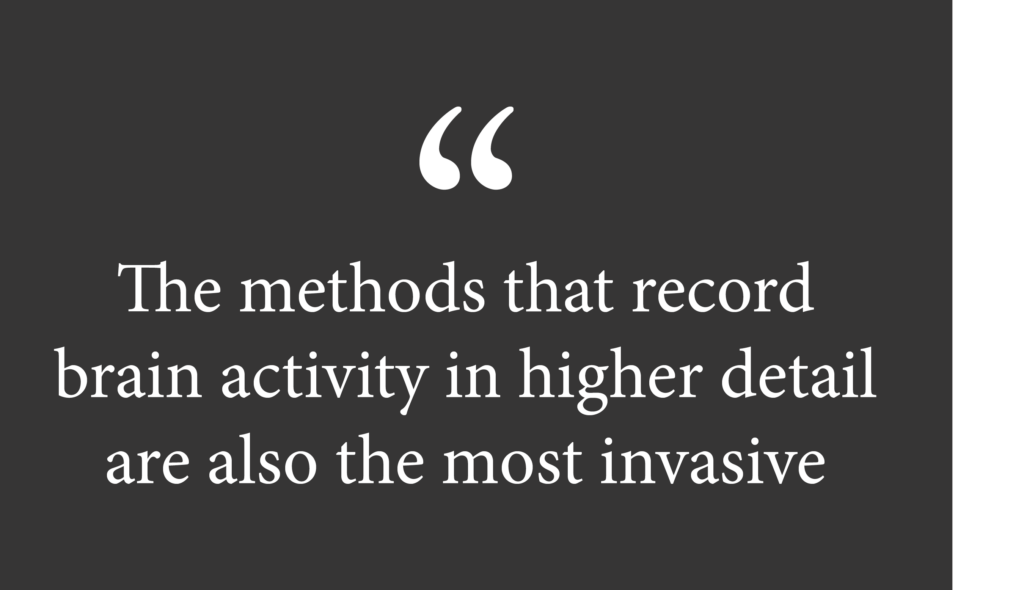

One such idea is neural lace, which is a fine mesh containing electrodes that can be injected into brain tissue. The neural lace is rolled up and placed in a tiny glass syringe, and expands once released into the desired area, where it can then record surrounding activity.

Syringe-injectable electronics like neural lace have been successfully implanted in rodents. The researchers applied the lace to two areas of an anesthetized rat’s brain, including the hippocampus, an area central to forming memories. Once there, it was able to record neuronal activity and, equally promising, it didn’t prompt an immune-system response.

While installing neural lace would still require an invasive procedure, the study’s authors noted, “Compared to other delivery methods, our syringe injection approach allows the delivery of large (with respect to the injection opening) flexible electronics into cavities and existing synthetic materials through small injection sites and relatively rigid shells.”

Another brain interface concept is neural dust: dust-sized silicon sensors that can read activity and stimulate nerves and muscles. The sensors rely on ultrasound generated by a small external unit. Ultrasound can relay information wirelessly between the dust and external devices, and it can reach almost anywhere in the body. What’s more, the dust can convert ultrasound vibrations into electricity to power an on-board transistor, eliminating the need for batteries while preserving the ability to stimulate nerve fibers.

Researchers estimate they can shrink ultrasonic, low-power neural dust down to half the width of a human hair. As of now, neural dust devices can function within the peripheral nervous system, but they will need to shrink further before they can be used in the brain.

Though not without their limitations, these technologies hint at how current methods might be improved. While it may be some time before we develop true brain-to-brain communication, a few game-changing innovations might be just around the corner, and a few key pieces are already in place.

The current state of brain-to-brain communication

There are two sides to the brain-to-brain communication story: reading the signals from one brain and introducing them comprehensibly into another. We have working examples for each side of the equation.

For instance, cochlear implants convert sound signals into electrical signals, which by way of an electrode array can stimulate the auditory nerve within the ear, allowing people to hear. A similar process happens in retinal implants, which sit at the back of the eye and stimulate the nerves in response to light signals. The nerves within the eyes and ears are, however, quite different from those in the cerebral cortex, both in terms of complexity and accessibility.

Some people who have lost limbs, or lost the use of their limbs, have learned to control robotic arms or mouse cursors with their brain signals. Juliano Pinto, for instance, was left paraplegic by a car accident, but thanks to a large exoskeleton and an EEG cap, he was able to kick a football at the opening of the 2014 World Cup in São Paulo.

When it comes to sending signals into other brains, there are a few working examples. One non-invasive brain-to-brain method, for instance, was demonstrated in an experiment that allowed a man’s thoughts to wiggle the tail of an anesthetized rat. When the man produced a specific pattern of brain activity, the signal was used to stimulate an area of the rat’s motor cortex, causing its tail to swing.

This is a long way from transferring language and ideas, of course. In that realm, one experiment with human subjects converted the brain signals for the words “hola” and “ciao” into binary and transmitted them between participants in India and France. The method was cumbersome, however: the receiver experienced the signal as flashes of light in the corner of their vision, not as the actual word being transmitted.
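Part of what made this approach cumbersome is that each word had to be broken into bits, with every bit sent and perceived one at a time. The Python sketch below is illustrative only. It assumes a simple fixed-length code of 5 bits per letter, which is not necessarily the scheme the researchers used, and the names `encode_word` and `decode_bits` are hypothetical.

```python
# Illustrative sketch: an ASSUMED fixed-length code of 5 bits per letter
# (a = 0 ... z = 25). This is not the experiment's actual encoding pipeline.
BITS_PER_LETTER = 5  # 2**5 = 32 codes, enough for the 26 letters a-z


def encode_word(word: str) -> list[int]:
    """Turn a word made of letters a-z into a flat list of bits, 5 per letter."""
    bits = []
    for ch in word.lower():
        if not ("a" <= ch <= "z"):
            raise ValueError(f"unsupported character: {ch!r}")
        index = ord(ch) - ord("a")
        # Emit the most significant bit first.
        for shift in range(BITS_PER_LETTER - 1, -1, -1):
            bits.append((index >> shift) & 1)
    return bits


def decode_bits(bits: list[int]) -> str:
    """Reverse encode_word by reading 5 bits at a time back into letters."""
    if len(bits) % BITS_PER_LETTER != 0:
        raise ValueError("bit count must be a multiple of 5")
    letters = []
    for start in range(0, len(bits), BITS_PER_LETTER):
        index = 0
        for bit in bits[start:start + BITS_PER_LETTER]:
            index = (index << 1) | bit
        letters.append(chr(ord("a") + index))
    return "".join(letters)


if __name__ == "__main__":
    for word in ("hola", "ciao"):
        bits = encode_word(word)
        # Each of these bits would have to be sent and perceived separately.
        print(word, len(bits), "".join(map(str, bits)))
```

Under this assumed scheme, even a four-letter word like “hola” takes 20 bits, each of which would have to be transmitted and perceived on its own.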

Movement appears to be easier to transfer between brains, as its neuronal signatures are easier to spot and interpret. Language is complex and detailed. Most adults have a vocabulary of approximately 42,000 words, which means a lot of neuronal signals to interpret if we’re going to start having brain-to-brain conversations, and that’s before the added complexities of context, humor, and sarcasm.
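As a rough, idealized illustration that treats every word as one equally likely symbol (real language is far less tidy), singling out one word from a 42,000-word vocabulary takes at least 16 bits of information:

$$\log_2 42{,}000 \approx 15.36 \quad\Rightarrow\quad \lceil \log_2 42{,}000 \rceil = 16 \text{ bits per word}$$

In other words, a single word would require 16 yes-or-no signals to be read correctly, before any context, humor, or sarcasm enters the picture.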

We’re not quite ready for this

There’s something magical about the brain that makes it more than just another engineering challenge. Perhaps that’s why the sci-fi appeal of transmitting messages with our thoughts has our collective imaginations running wild. But there are also some deep challenges to humans adopting a technology like this.

Take just one consideration: identity. Experiencing another person’s voice not as sound but inside your mind, just as if it were your own thoughts, could have striking effects on your sense of self. If your thoughts merge with everyone else’s, where does your “I” begin and end? Not to mention all the opportunities for hacking, spying, and other hijinks.

There’s also the chance that we will have these mental conversations not just with other people but with bots and machines. If we can call on the wealth of knowledge available on the Internet and have it communicated to us as naturally as recalling a memory, we may come to see it, perhaps legitimately, as an augmentation and extension of ourselves rather than an external source of information. If we think fake news and spam are a problem now…

Are we at all prepared for such a fundamental change to communication itself? How quickly can people adapt to such an ability in ways that are positive and healthy for the individual and society? For now, and for a long time to come, these ideas will remain kindling for the fire of imagination. But it’s a blazingly fascinating fire.