If ever there was a case of "greater than the sum of its parts," you'll find it in Entefy's multimodal AI, the machine learning paradigm in which computer vision, natural language processing, audio, and other forms of data intelligence work together to produce advanced capabilities and insights.

As people, we experience the world through a number of senses, or modalities, that are seamlessly integrated to give us a holistic understanding of our surroundings. From touch to vision to hearing, our ability to learn rests on a complex yet unified cognitive process. Much of machine learning's success so far, by contrast, has come from models that learn from a single modality, such as text, audio, or images alone.

For AI to achieve significantly better performance and understanding, it needs to get better at cross-modal learning, which involves diverse data types. What matters here isn't simply the mechanics but the artful interpretation, fusion, disambiguation, and knowledge transfer required when dealing with multiple modalities and the models that evaluate them.

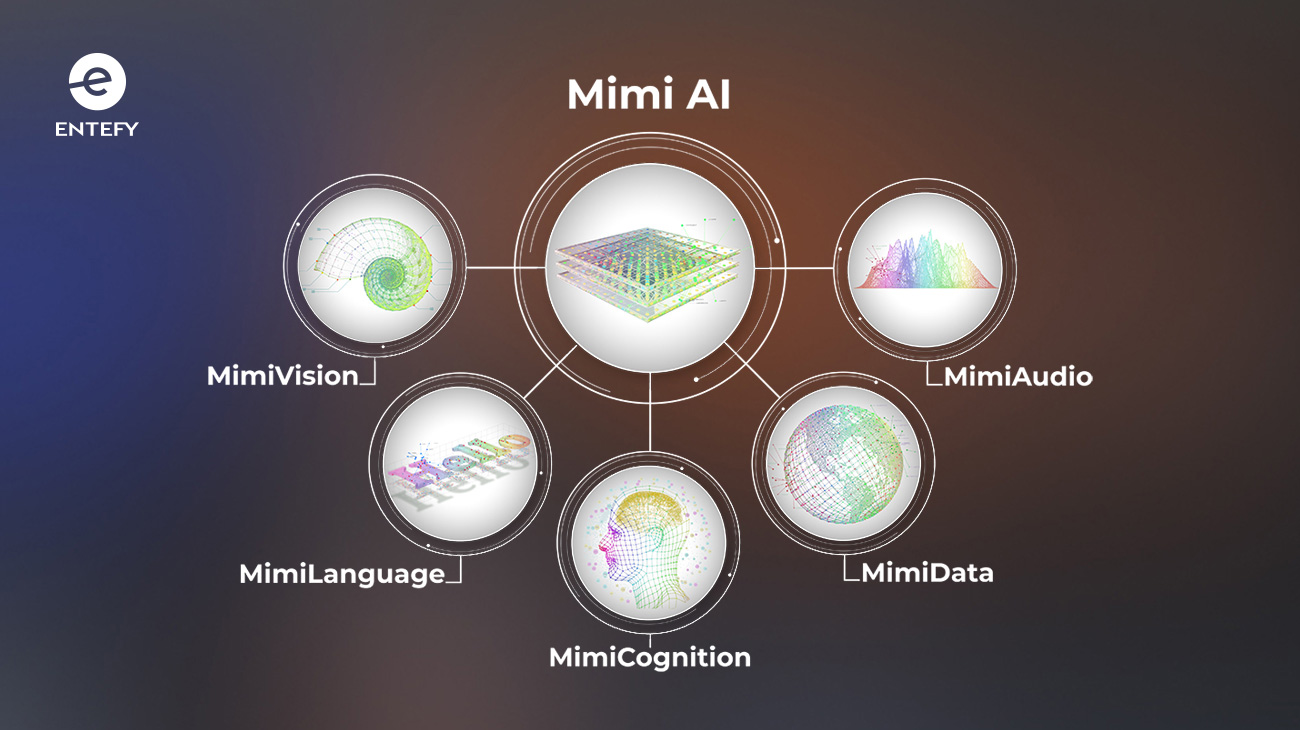

To see just how effective the multimodal approach can be, check out this overview of Entefy's Mimi AI. In the video, Entefy Co-Founder Brienne Ghafourifar gives a guided tour of the Mimi platform and highlights some of the valuable use cases Mimi makes possible.